Machine Learning Average Loss

A mathematical derivation of the above formula can be found in Quantile Regression article in WikiWand. Higher loss is the worsebad prediction for any model.

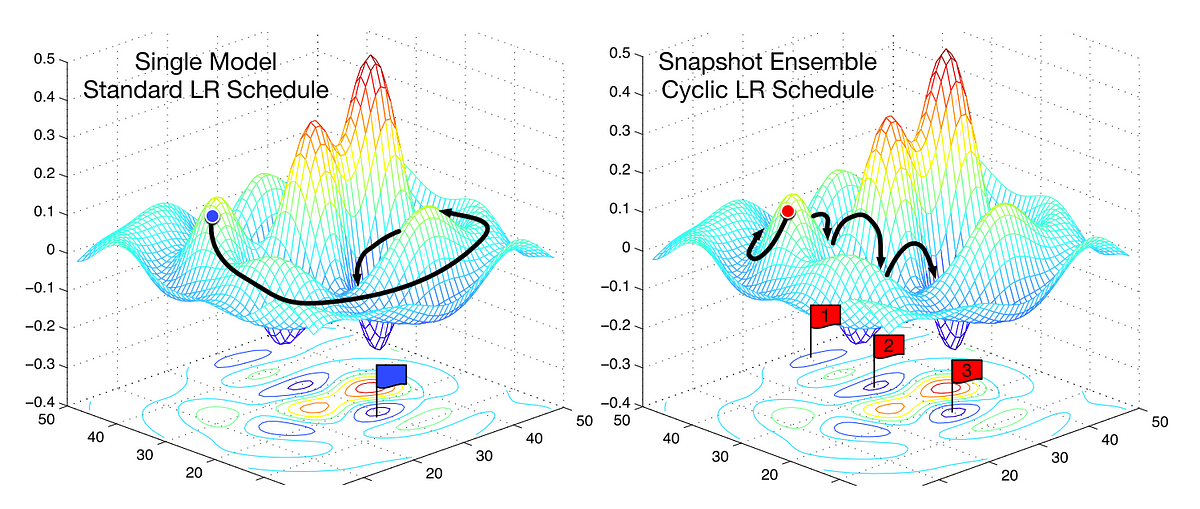

Understanding Learning Rates And How It Improves Performance In Deep Learning By Hafidz Zulkifli Towards Data Science

Loss is the penalty for a bad prediction.

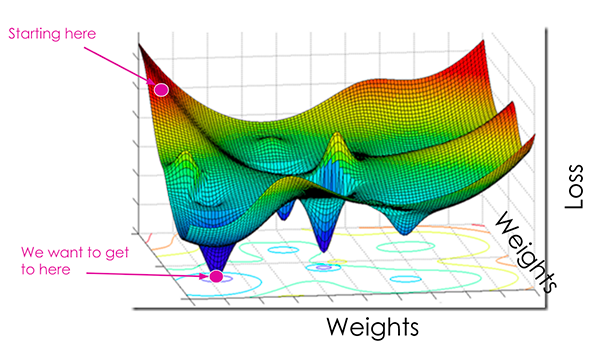

Machine learning average loss. Gradually with the help of some optimization function loss function learns to reduce the error in prediction. This computed difference from the loss functions such as Regression Loss Binary Classification and Multiclass Classification loss. If you are interested in an intuitive explanation read the following section.

The accuracy of a model is usually determined after the model parameters are learned and fixed and no learning is taking place. Ideally one would expect the reduction of loss after each or several iterations. W X T X 1 X T Y.

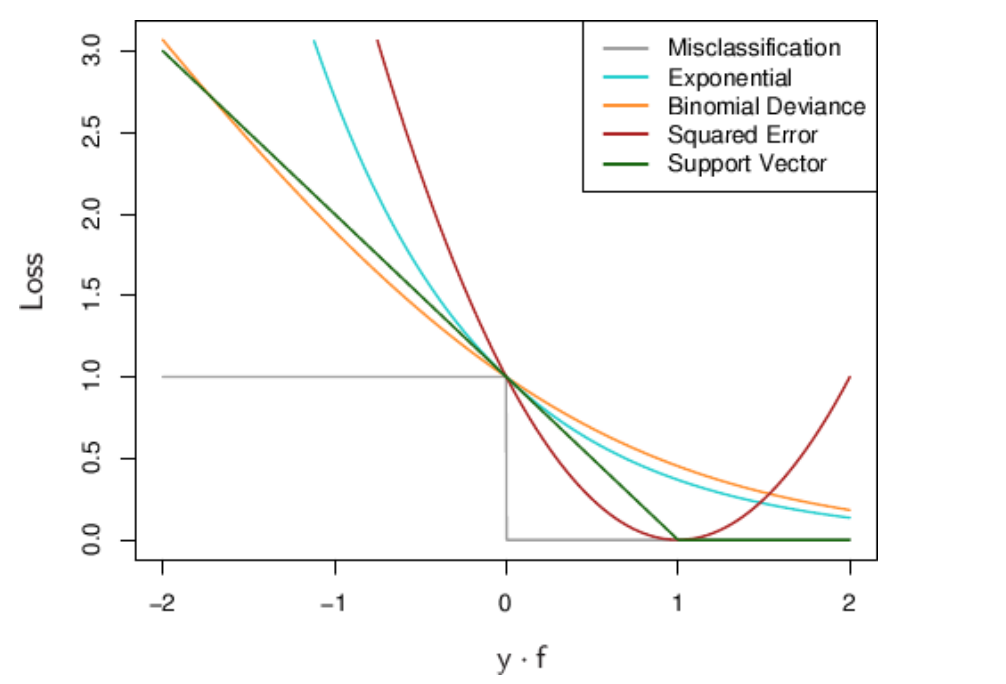

This is the loss function used in multinomial logistic regression and extensions of it such as neural networks defined as the negative log-likelihood of the. Its meaning is to take log the probability value after softmax and add the probability value of the correct answer to the average. While complicated log loss is an essential metric used for applied machine learning and is widely used for binary classifiers.

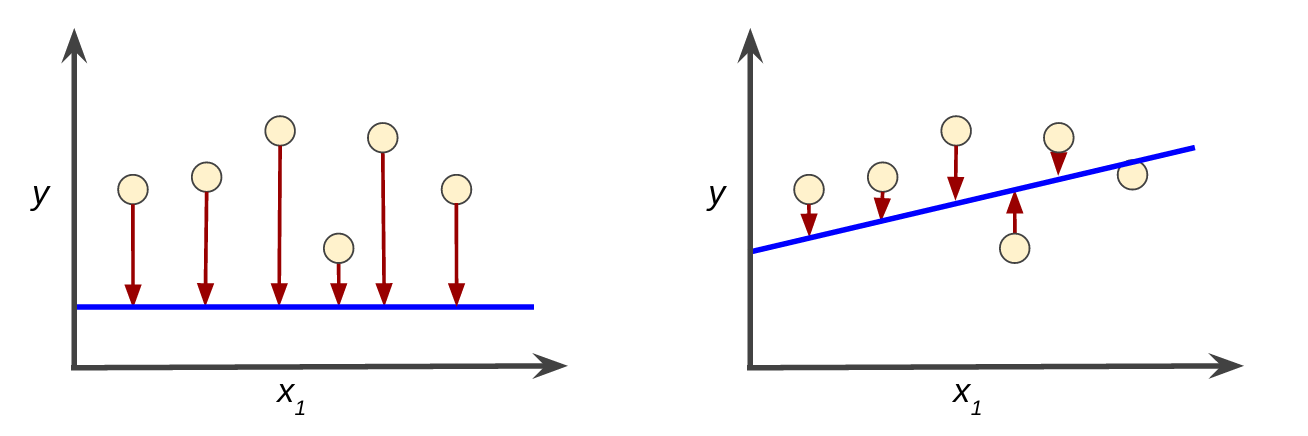

In Machine learning the loss function is determined as the difference between the actual output and the predicted output from the model for the single training example while the average of the loss function for all the training example is termed as the cost function. Loss value implies how well or poorly a certain model behaves after each iteration of optimization. Machines learn by means of a loss function.

Lyip yi maxqyip yi q 1 yip yi For a set of predictions the loss will be its average. Hence the ratio is exactly the batch_size argument of your input_fn. Otherwise the loss is greater.

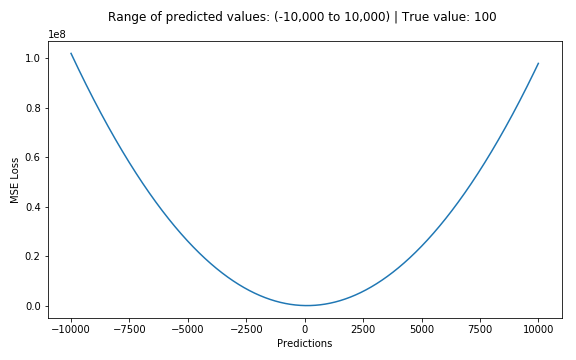

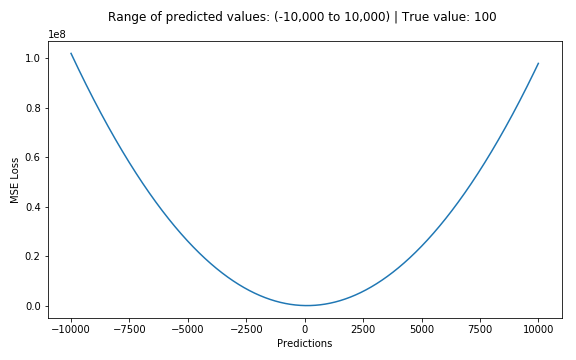

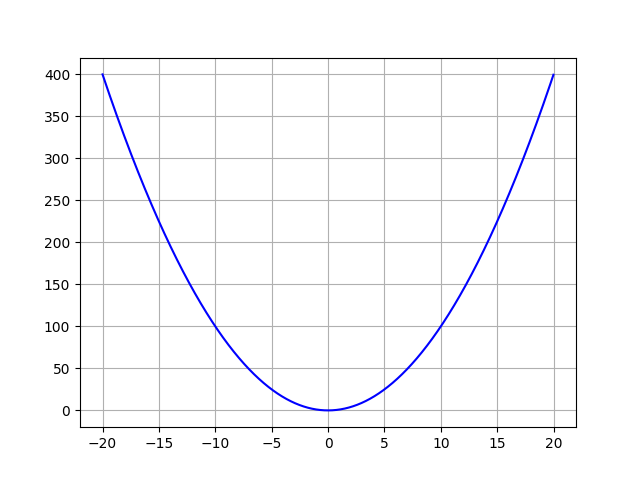

Still different estimators would be optimal under other less common circumstances. If you express your weights as a vector W length N f i e l d s your example features as a matrix X size N e x a m p l e s N f i e l d s and the labels as a vector Y length N e x a m p l e s then you can get an exact solution to minimise loss using the linear least squares equation. Mean square error MSE is the average squared loss per example over the whole dataset.

If you pass batch_size1 you should see them equal. If the models prediction is perfect the Loss is zero. Then the test samples are fed to the model and the number of mistakes zero-one loss the.

Without log loss the artificial intelligence that enables many of our day-to-day activities wouldnt make proper decisions making it. A loss is a number indicating how bad the models prediction was on a single example. Macro - Calculate the metric for each class and take the unweighted average.

Its a method of evaluating how well specific algorithm models the given data. If predictions deviates too much from actual results loss function would cough up a very large number. To calculate MSE sum up all the squared losses for.

If the models prediction is perfect the loss is zero. Machine Learning Python PyTorch NLLLoss is a loss function commonly used in multi-classes classification tasks. The goal of training a model is to find a set of weights and biases that have low loss on average across all examples.

That is Loss is a number indicating how bad the models prediction was on a single example. An Azure Machine Learning experiment created with either. The Azure Machine Learning studio.

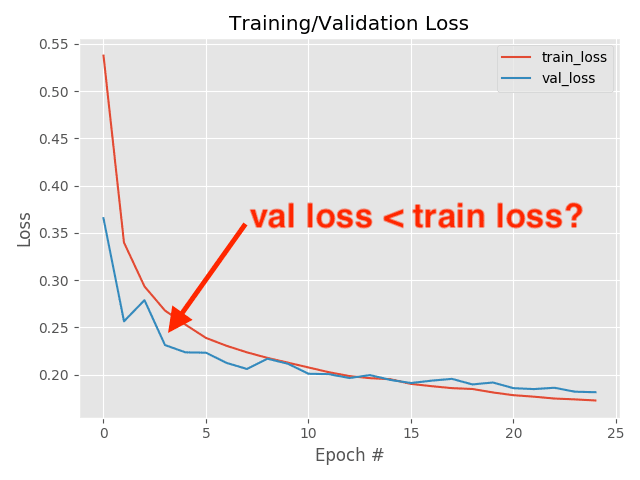

Under typical statistical assumptions the mean or average is the statistic for estimating location that minimizes the expected loss experienced under the squared-error loss function while the median is the estimator that minimizes expected loss experienced under the absolute-difference loss function. The difference between average_loss and loss is that one reduces the SUM over the batch losses while the other reduces the MEAN over the same losses.

Introduction To Loss Functions

What Does It Mean When Train And Validation Loss Diverge From Epoch 1 Stack Overflow

Common Loss Functions In Machine Learning By Ravindra Parmar Towards Data Science

Introduction To Loss Functions

Introduction To Loss Functions

Why Is My Validation Loss Lower Than My Training Loss Pyimagesearch

Loss Function Loss Function In Machine Learning

Understanding Loss Functions Hinge Loss By Kunal Chowdhury Analytics Vidhya Medium

What Does It Mean When Train And Validation Loss Diverge From Epoch 1 Stack Overflow

Introduction To Loss Functions

How To Interpret Loss And Accuracy For A Machine Learning Model Stack Overflow

Why Is My Validation Loss Lower Than My Training Loss Pyimagesearch

When Can Validation Accuracy Be Greater Than Training Accuracy For Deep Learning Models

Why Is My Validation Loss Lower Than My Training Loss Pyimagesearch

5 Regression Loss Functions All Machine Learners Should Know By Prince Grover Heartbeat

Understanding Learning Rates And How It Improves Performance In Deep Learning By Hafidz Zulkifli Towards Data Science

Descending Into Ml Training And Loss Machine Learning Crash Course

Why Is My Validation Loss Lower Than My Training Loss Pyimagesearch

Understanding The 3 Most Common Loss Functions For Machine Learning Regression By George Seif Towards Data Science

Post a Comment for "Machine Learning Average Loss"