Bagging Machine Learning Explained

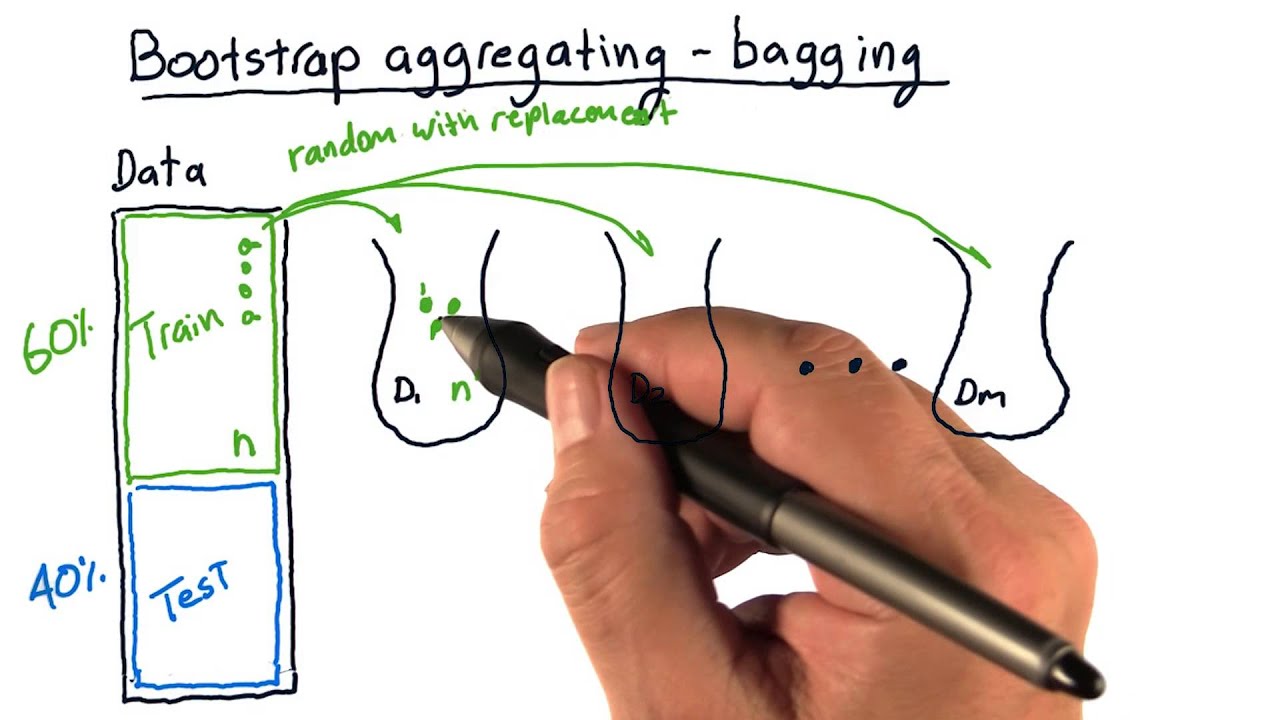

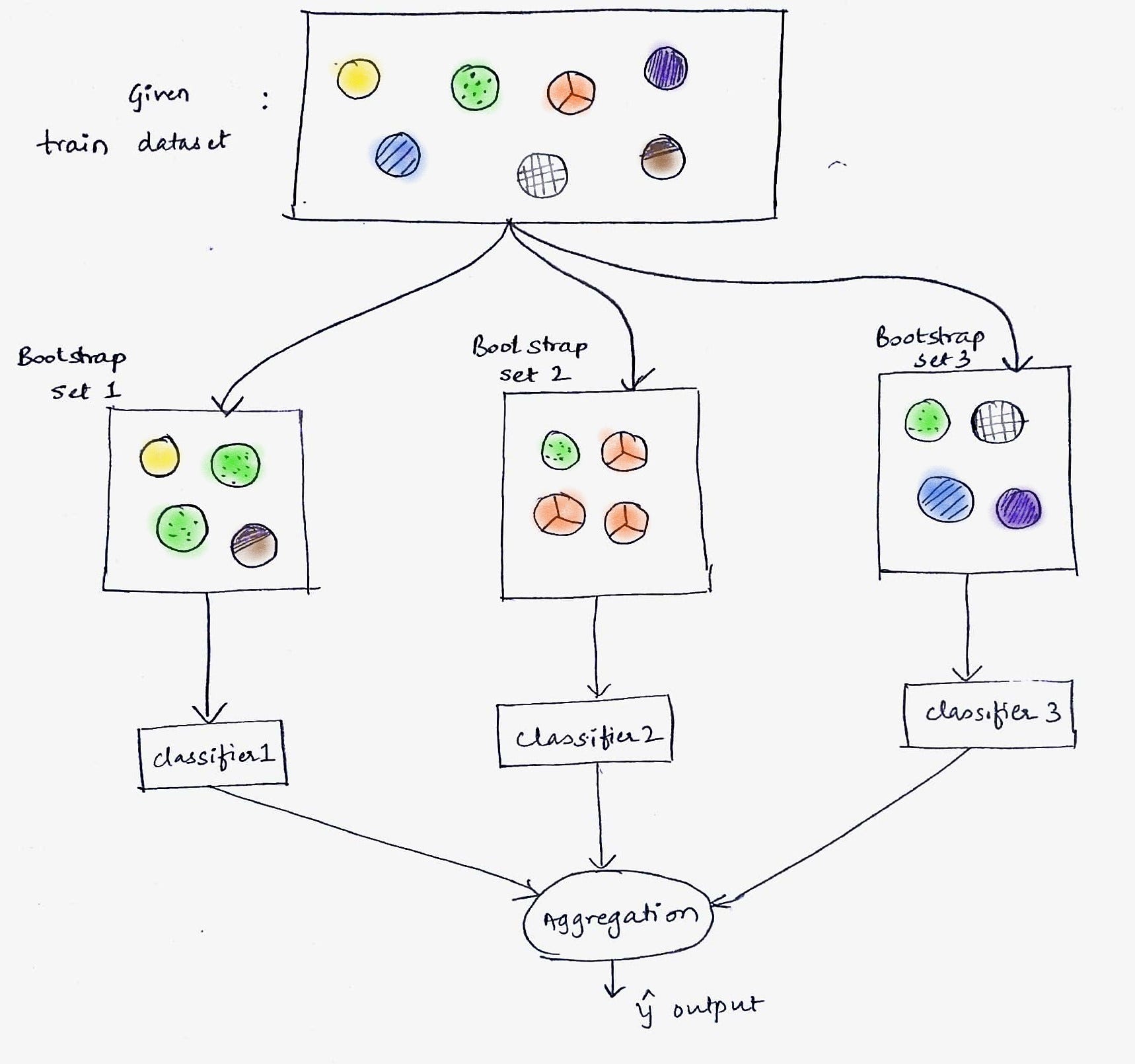

Decision trees trained on the same data tend to produce similar, highly correlated predictions. Bootstrap aggregation, better known as bagging, is a simple and powerful ensemble method.


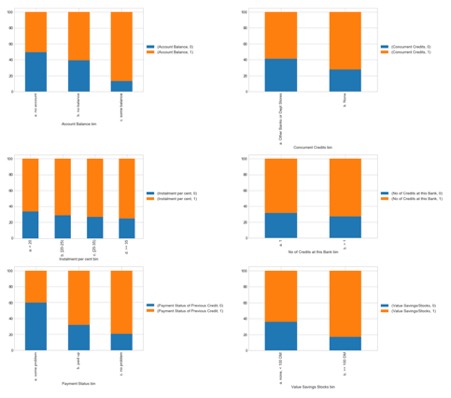

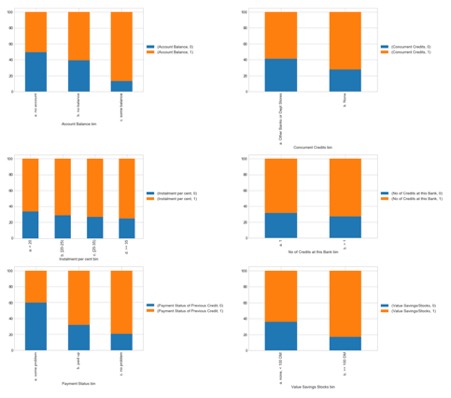

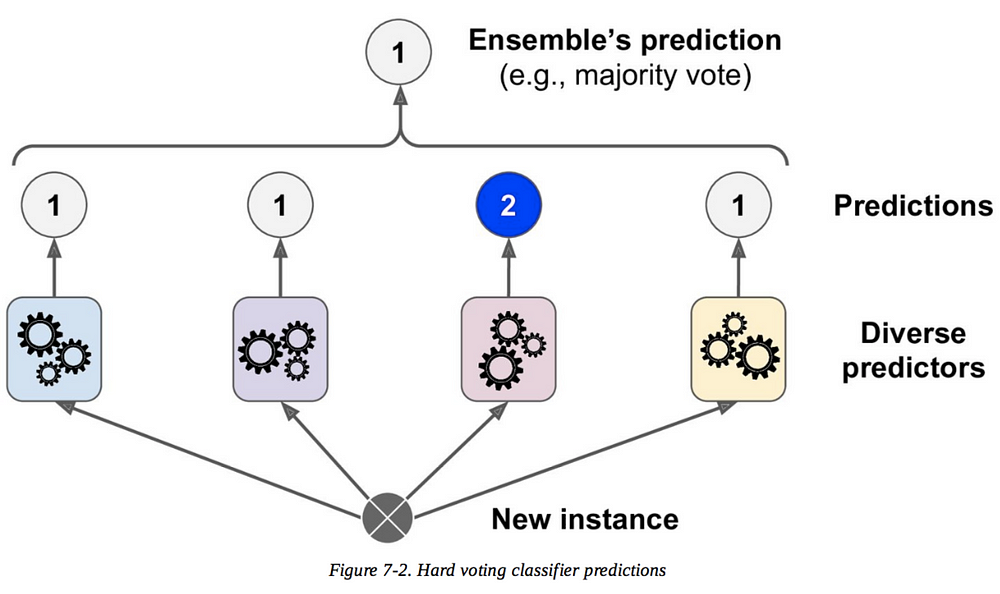

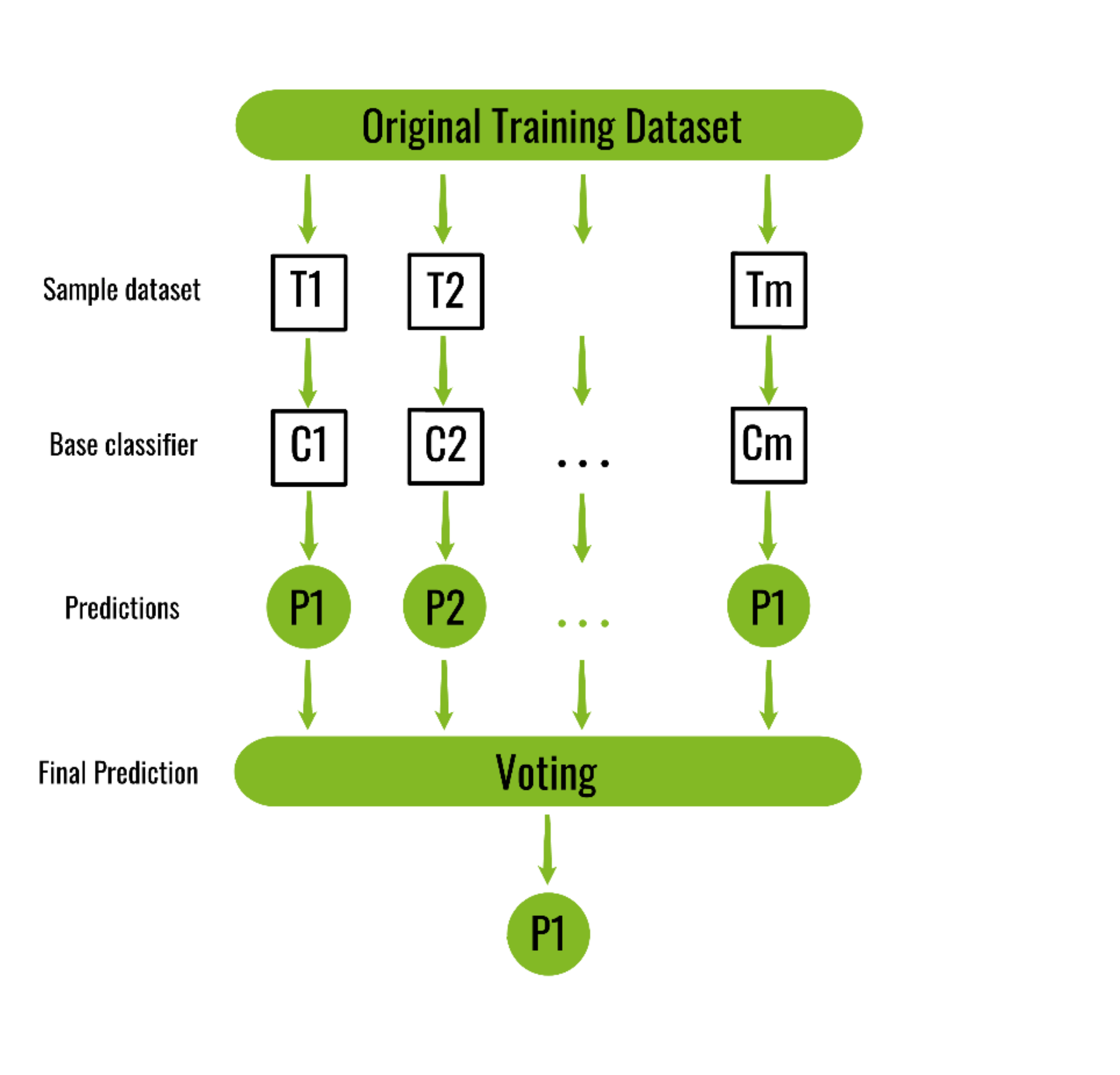

A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions, either by voting or by averaging, to form a final prediction.
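The definition above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the decision stump base classifier, the function names (`fit_stump`, `fit_bagging`, `predict_bagging`), the toy data, and the choice of 25 estimators are all assumptions made for the example.

```python
import random
from collections import Counter

def fit_stump(points):
    """Fit a one-feature threshold classifier (decision stump).

    points: list of (x, label) pairs with label in {0, 1}.
    Returns (threshold, label_if_at_or_below, label_if_above).
    """
    best = None
    for threshold, _ in points:
        for below, above in ((0, 1), (1, 0)):
            errors = sum(
                (below if x <= threshold else above) != y for x, y in points
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, below, above)
    return best[1:]

def predict_stump(stump, x):
    threshold, below, above = stump
    return below if x <= threshold else above

def fit_bagging(points, n_estimators=25, seed=0):
    """Fit one stump per bootstrap sample of the training data."""
    rng = random.Random(seed)
    stumps = []
    for _ in range(n_estimators):
        # Bootstrap sample: same size as the data, drawn with replacement.
        sample = [rng.choice(points) for _ in points]
        stumps.append(fit_stump(sample))
    return stumps

def predict_bagging(stumps, x):
    """Aggregate the individual predictions by majority vote."""
    votes = Counter(predict_stump(s, x) for s in stumps)
    return votes.most_common(1)[0][0]

# Toy data: class 0 for small x, class 1 for large x, with one noisy label.
data = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 1),
        (2.5, 0), (3.0, 1), (3.5, 1), (4.0, 1)]
model = fit_bagging(data)
```

Calling `predict_bagging(model, x)` returns the label that most stumps vote for. Each stump sees a slightly different resampled dataset, so the thresholds differ from stump to stump, and the vote smooths out those differences.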

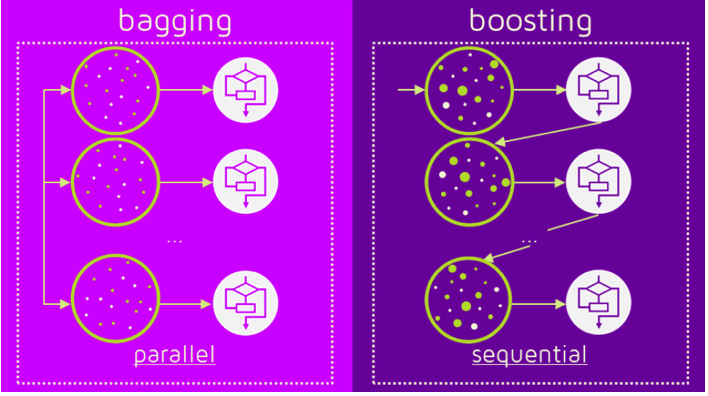

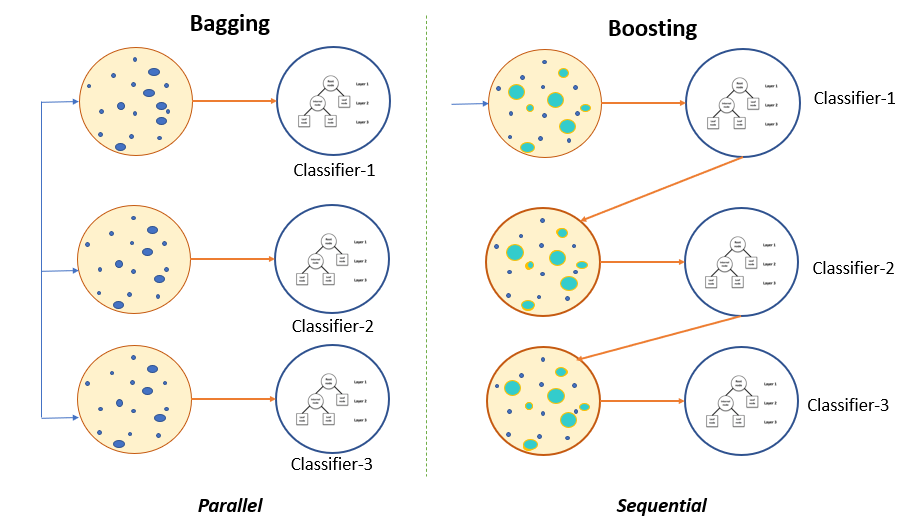

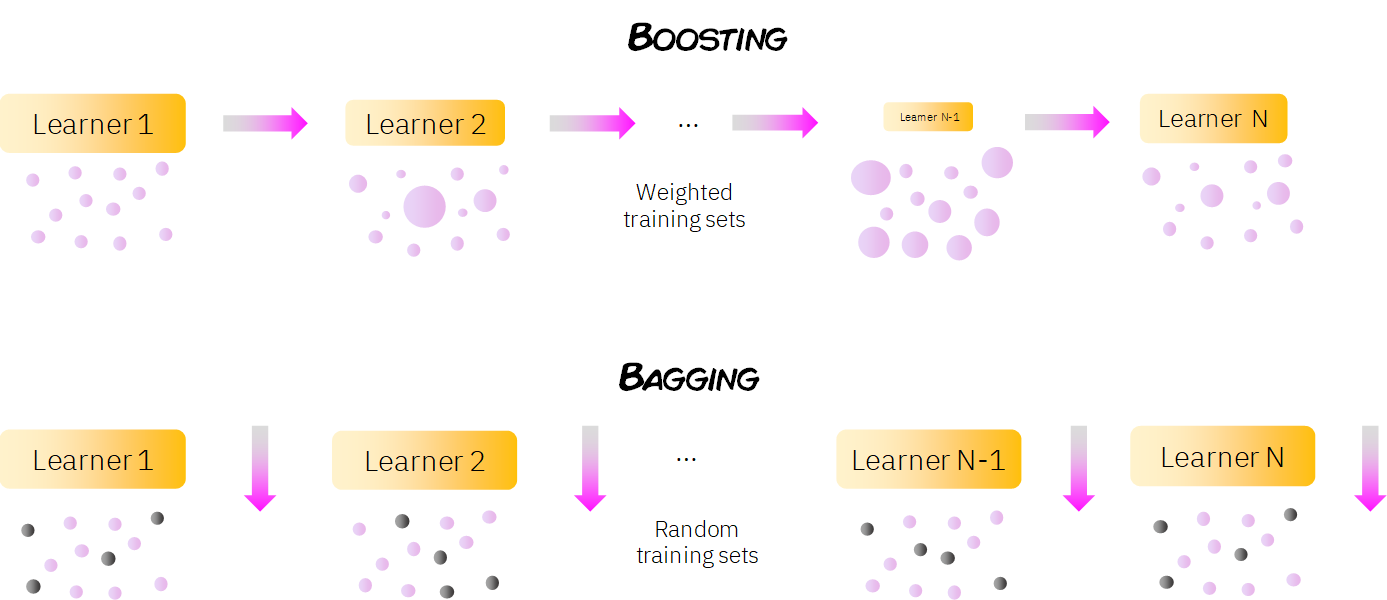

In boosting, new sub-datasets are drawn randomly with replacement from the weighted (updated) dataset. The main goal of bagging is to decrease variance, not bias.
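A standard way to see why averaging lowers variance but leaves bias alone is the variance of a mean of correlated estimators. The symbols here are assumptions introduced for the illustration: $B$ models $\hat f_1, \dots, \hat f_B$, each with variance $\sigma^2$ and pairwise correlation $\rho$.

$$
\operatorname{Var}\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
= \frac{1}{B^2}\left(B\sigma^2 + B(B-1)\rho\sigma^2\right)
= \rho\sigma^2 + \frac{1-\rho}{B}\sigma^2
$$

The second term shrinks as $B$ grows, while the first term does not, which is why strongly correlated trees limit how much bagging can help. The expected value of the average equals the expected value of a single model, so the bias is unchanged.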

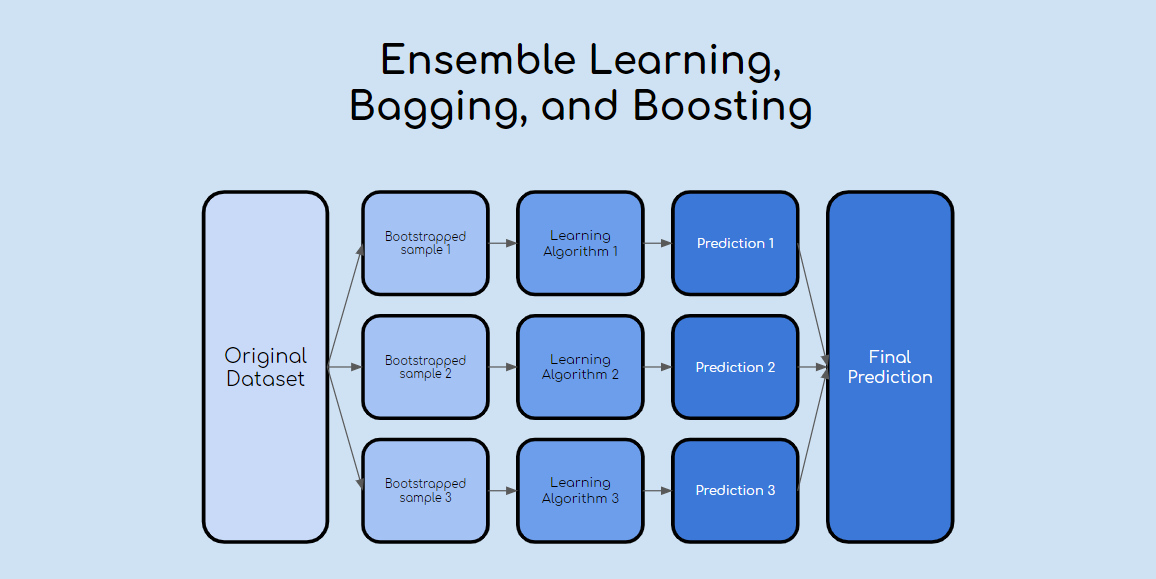

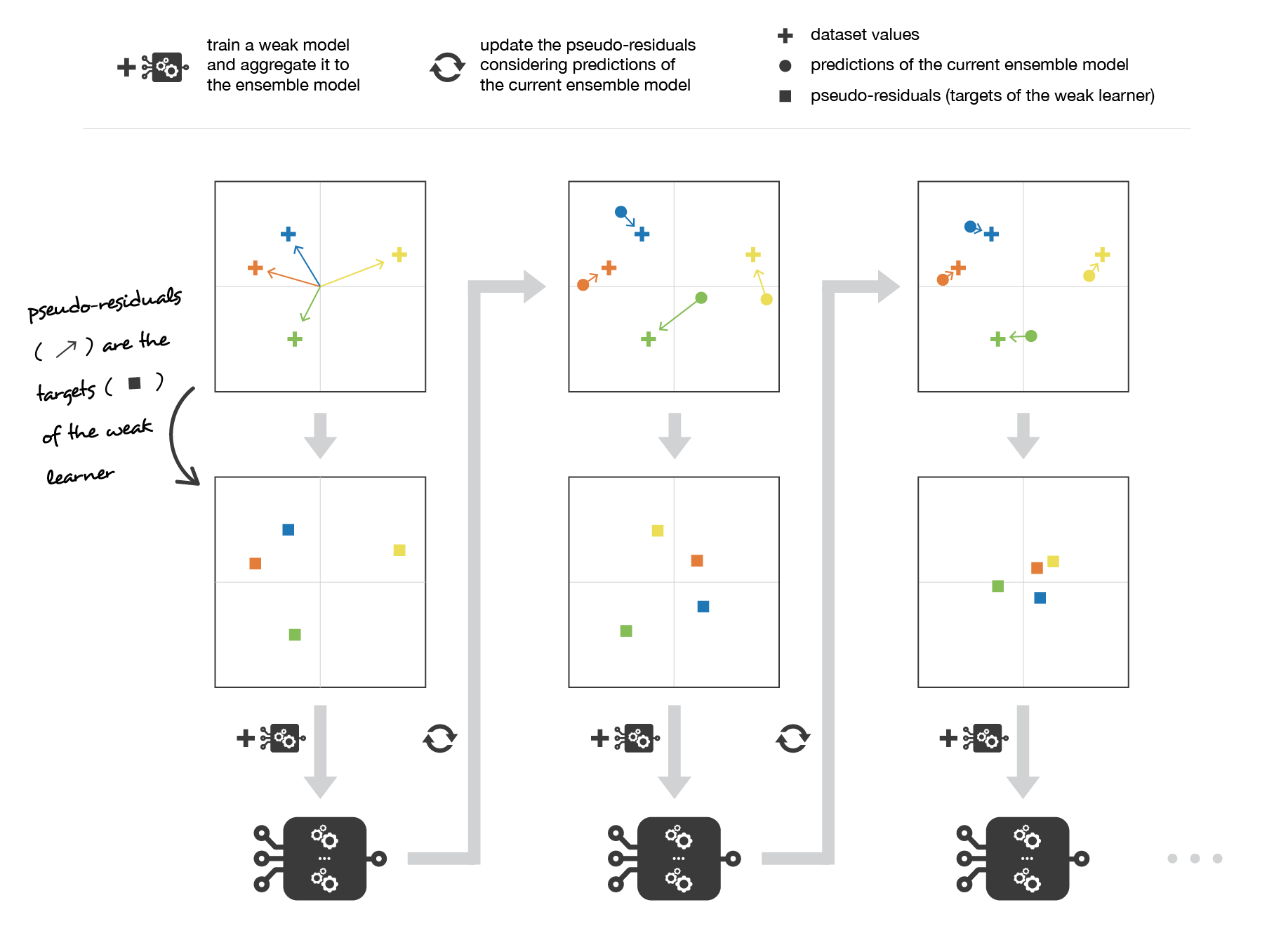

Sampling is done with replacement on the original dataset, and new datasets are formed. Each of these subsets is then used to train its own decision tree, so we end up with an ensemble of different models. Boosting, by contrast, builds a model by using weak models in series.

The main goal of boosting is to decrease bias, not variance. In bagging, multiple training data subsets are drawn randomly with replacement from the original dataset. This helps in reducing variance.
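Drawing with replacement means some rows appear several times in a subset and others not at all. The sketch below, with a hypothetical helper `bootstrap_sample` and an arbitrary dataset of 1000 integers, measures what fraction of the original rows end up in one bootstrap sample. For a sample of the same size as the data, the expected fraction is $1 - (1 - 1/n)^n$, roughly 63% for large $n$.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]

rng = random.Random(42)
data = list(range(1000))
sample = bootstrap_sample(data, rng)

# Fraction of distinct original rows that made it into the sample.
unique_fraction = len(set(sample)) / len(data)

# Theoretical expectation for a sample of the same size as the data.
expected_fraction = 1 - (1 - 1 / len(data)) ** len(data)
```

The rows left out of a given sample are what make the subsets differ from each other, and so what makes the fitted models differ.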

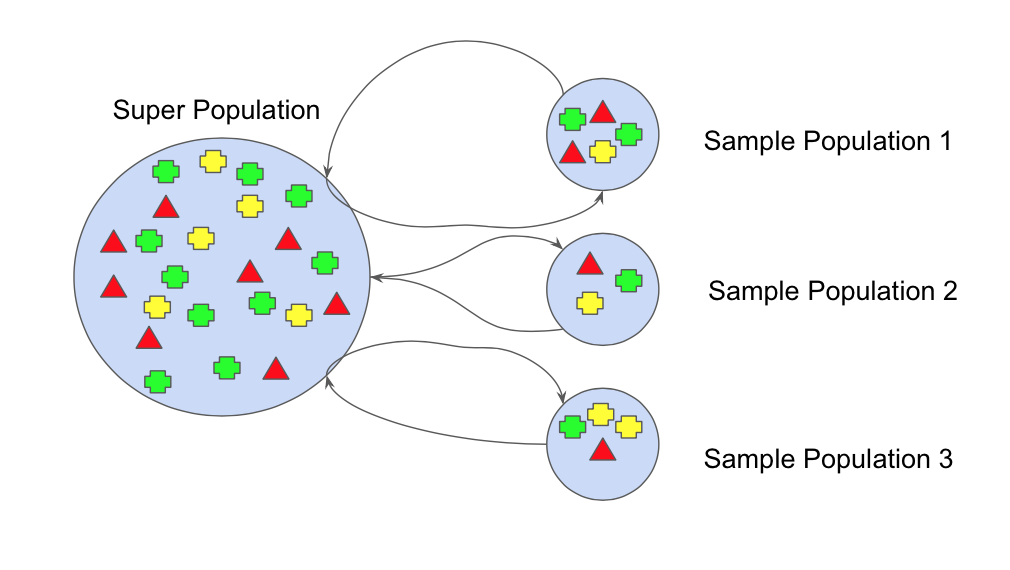

Here the concept is to create a few subsets of data from the training sample, chosen randomly with replacement. A classifier is built on each of these smaller datasets, usually the same kind of classifier for each. Bagging provides stability and increases the accuracy of machine learning algorithms used in statistical classification and regression.

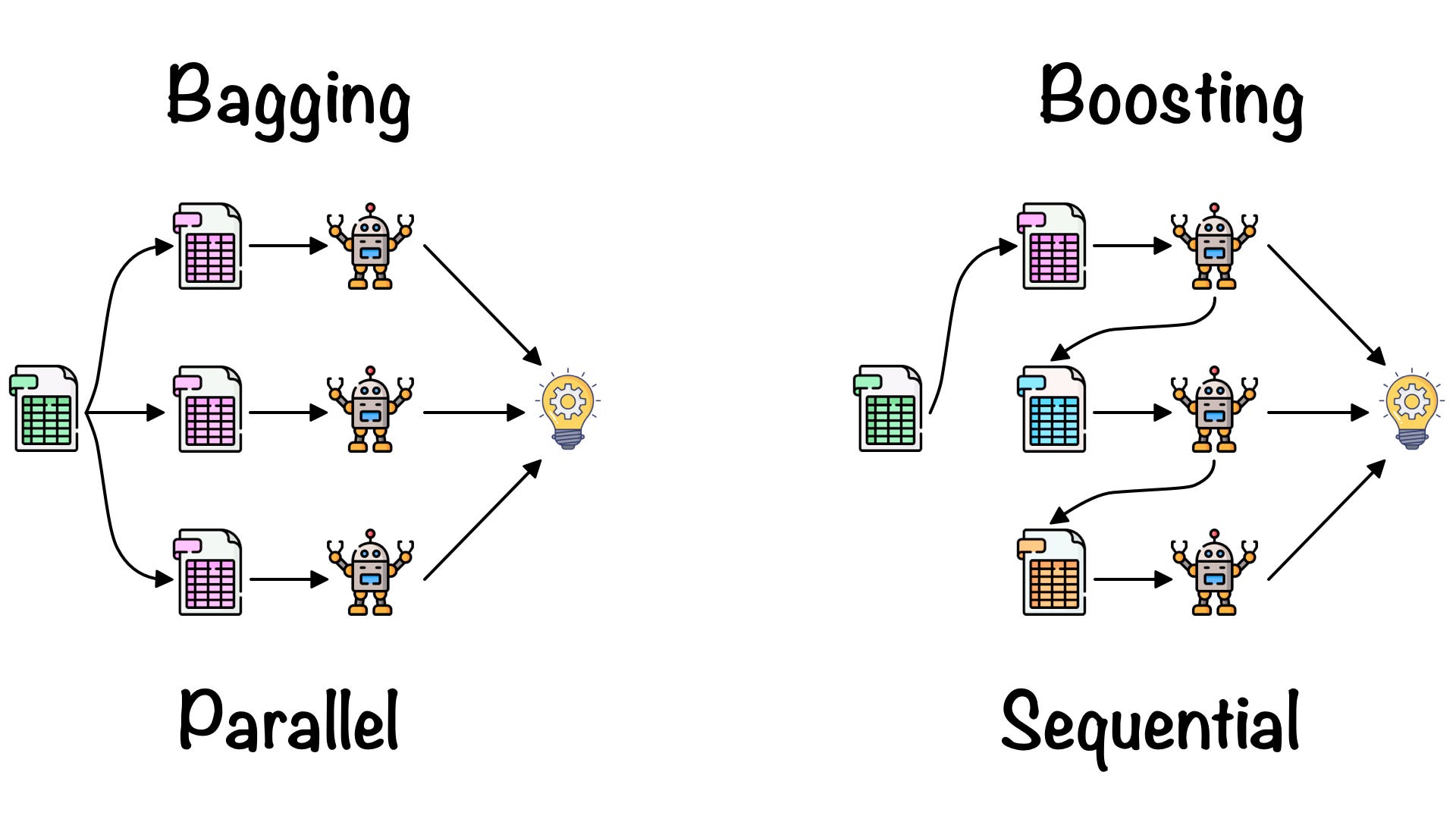

Bagging, a parallel ensemble method whose name stands for bootstrap aggregating, is a way to decrease variance. It is a powerful ensemble method that helps to reduce variance and, by extension, prevent overfitting. Ensemble learning is a machine learning technique in which multiple weak learners are trained to solve the same problem and are then combined to get more accurate and efficient results.

Boosting is an ensemble modeling technique that attempts to build a strong classifier from a number of weak classifiers. The general principle of an ensemble method in machine learning is to combine the predictions of several models.

Bagging methods help reduce the overfitting of the model. Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, usually decision trees. It is a powerful method to improve the performance of simple models and reduce the overfitting of more complex models.

Ensemble methods improve model precision by using a group of models which, when combined, outperform the individual models used separately. The principle is easy to understand: instead of fitting the model on one sample of the population, several models are fitted on different samples of the population, drawn with replacement.

Bagging generates additional training data from the dataset by resampling it with replacement. The biggest advantage of bagging is that multiple weak learners can work better than a single strong learner.

These models are then aggregated using their average, a weighted average, or a vote. Bagging is used when the objective is to reduce the variance of a decision tree.
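The three aggregation rules named above can each be written as a short function. The function names, sample predictions, and weights below are illustrative assumptions. Averaging fits regression outputs, and voting fits class labels.

```python
from collections import Counter

def average(predictions):
    """Plain mean of numeric predictions."""
    return sum(predictions) / len(predictions)

def weighted_average(predictions, weights):
    """Mean of numeric predictions, with each model given its own weight."""
    return sum(p * w for p, w in zip(predictions, weights)) / sum(weights)

def majority_vote(labels):
    """Most frequent class label among the models' predictions."""
    return Counter(labels).most_common(1)[0][0]

# Hypothetical outputs from three models for a single input.
regression_outputs = [2.0, 3.0, 4.0]
class_outputs = ["spam", "ham", "spam"]

mean_prediction = average(regression_outputs)
weighted_prediction = weighted_average(regression_outputs, [1, 1, 2])
voted_label = majority_vote(class_outputs)
```

Plain bagging typically uses the unweighted average or vote. Weights only matter when some models are trusted more than others.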

First, a model is built from the training data.

Bagging is short for bootstrap aggregation and is used to decrease the variance of the prediction model. It is a parallel method: the base learners are fitted independently of each other, which makes it possible to train them simultaneously. Machine learning programs can be trained to examine medical images or other information and look for certain markers of illness, such as a tool that can predict cancer risk based on a mammogram.
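Because no base learner needs another learner's result, the fits can be handed to a pool of workers. The sketch below uses Python's standard `concurrent.futures.ThreadPoolExecutor` to show the structure. The base learner (the mean of a bootstrap sample), the function name `fit_mean_model`, and the data are assumptions for the example. In CPython, threads will not speed up CPU-bound fitting, so a process pool or a library with native parallelism would be the practical choice.

```python
import random
from concurrent.futures import ThreadPoolExecutor

def fit_mean_model(args):
    """Fit a trivial base learner: the mean of one bootstrap sample."""
    data, seed = args
    rng = random.Random(seed)  # Per-task generator keeps results reproducible.
    sample = [rng.choice(data) for _ in data]
    return sum(sample) / len(sample)

data = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
tasks = [(data, seed) for seed in range(20)]

# No task depends on another task's result, so they can run in any order.
with ThreadPoolExecutor(max_workers=4) as pool:
    models = list(pool.map(fit_mean_model, tasks))

# Aggregate the independently fitted models by averaging.
bagged_estimate = sum(models) / len(models)
```

Boosting cannot be parallelized this way, since each of its models is built using the results of the previous one.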

