Machine Learning Cost Function Vs Loss Function

To understand the difference clearly, let's start with the basics: a loss function is used during the learning process.

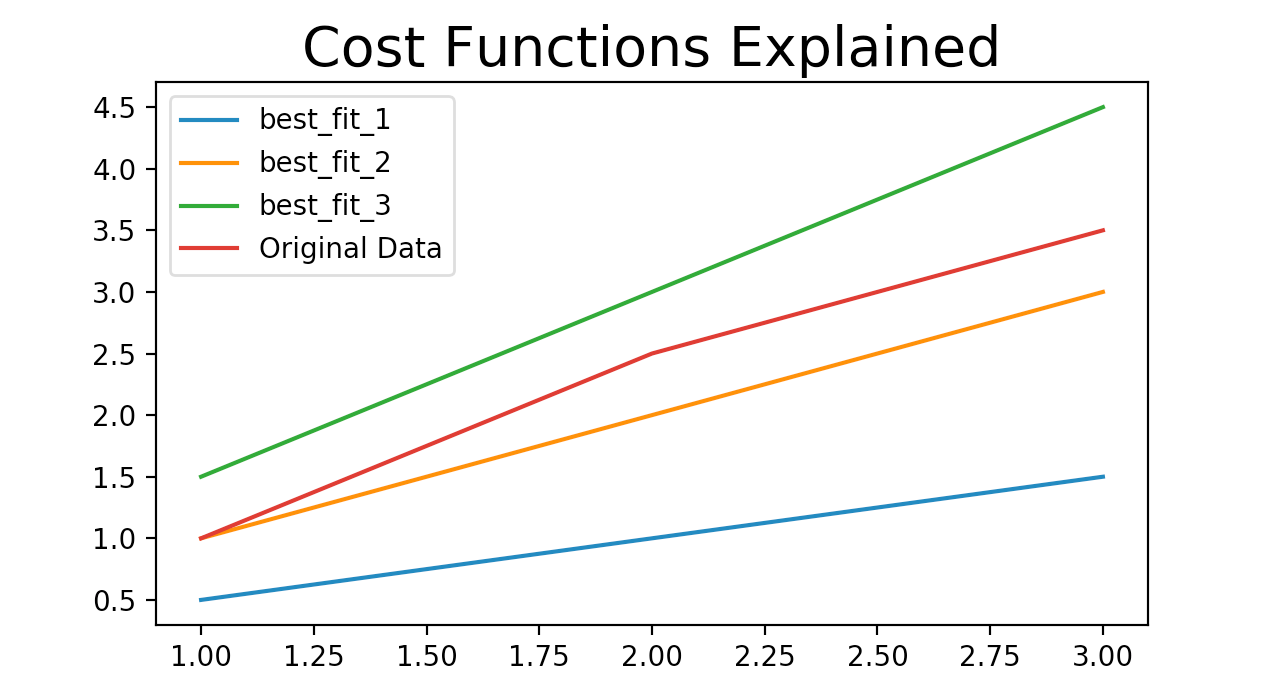

Coding Deep Learning For Beginners Linear Regression Part 2 Cost Function By Kamil Krzyk Towards Data Science

We will go over various loss functions.

A metric is used to evaluate your model. In an ML context, you might want to minimize such a quantity by adjusting the model's parameters. Suppose you train three different models, each using a different algorithm and loss function, to solve the same image classification task.

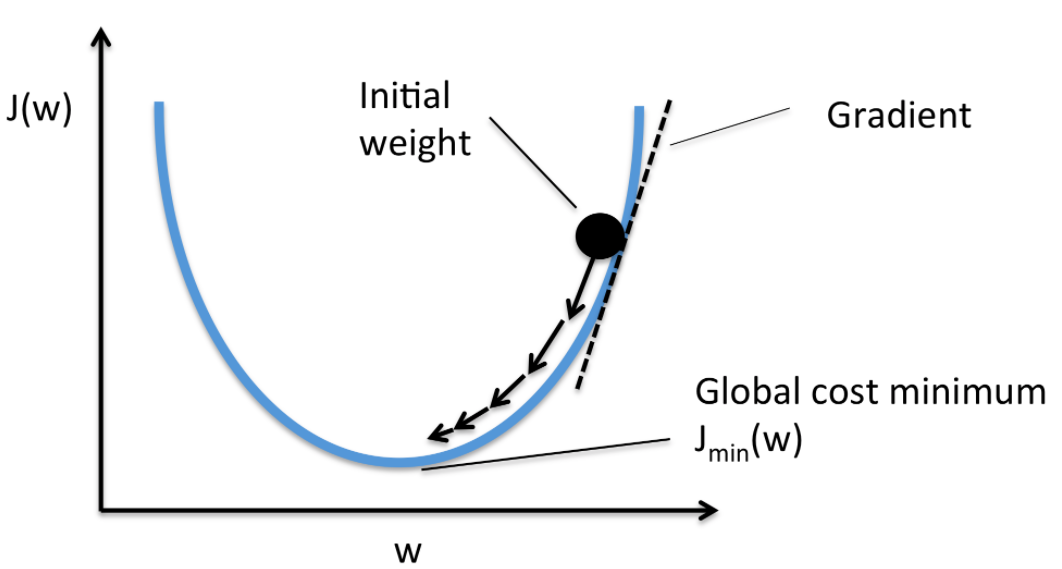

If J(θ) ever increases, then you probably need to decrease the learning rate α. Make a plot with the number of iterations on the x-axis and J(θ) on the y-axis. We seek a function f(X) for predicting Y given values of the input X; the loss function L(Y, f(X)) is a function for penalizing the errors in prediction.
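The advice to watch J(θ) over the iterations can be illustrated with a minimal sketch. It assumes univariate linear regression with the squared-error cost J(θ) = 1/(2m) Σ (h(x) − y)², batch gradient descent, and a made-up toy data set. Instead of drawing a plot, it records J(θ) after every iteration and checks whether the curve ever goes up. The helper names (`cost`, `gradient_descent`, `is_non_increasing`) are hypothetical.

```python
# Minimal sketch (assumptions: univariate linear regression, squared-error
# cost, batch gradient descent, toy data). Helper names are hypothetical.

def cost(theta0, theta1, xs, ys):
    """J(theta) = 1/(2m) * sum((h(x) - y)^2) with h(x) = theta0 + theta1 * x."""
    m = len(xs)
    return sum((theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, alpha, iterations):
    """Run batch gradient descent and record J(theta) after every iteration."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0
    history = []
    for _ in range(iterations):
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Simultaneous update of both parameters
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
        history.append(cost(theta0, theta1, xs, ys))
    return (theta0, theta1), history


def is_non_increasing(history):
    """True if J(theta) never goes up from one iteration to the next."""
    return all(later <= earlier for earlier, later in zip(history, history[1:]))


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # generated from y = 1 + 2x

_, good = gradient_descent(xs, ys, alpha=0.05, iterations=200)
_, bad = gradient_descent(xs, ys, alpha=0.5, iterations=20)
print(is_non_increasing(good))  # True: alpha is small enough
print(is_non_increasing(bad))   # False: J(theta) grows, so alpha should be decreased
```

Plotting `good` and `bad` against their index would give the iteration plot described above; the boolean check is just a compact stand-in for looking at that curve.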

The following content will help you decide which loss function to use to train your machine learning model. The loss function and the evaluation metric might be the same function, but this is not necessarily the case.

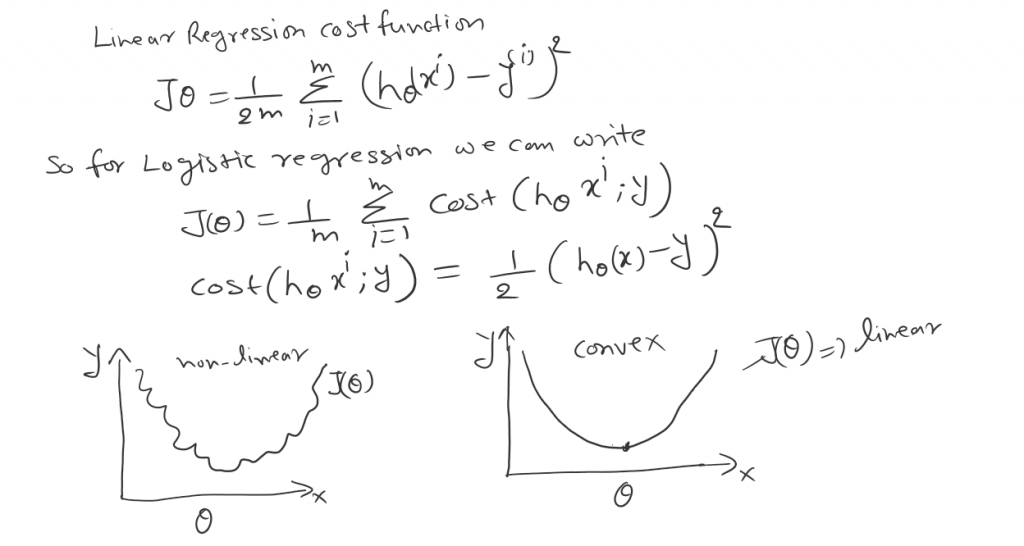

So, in a single training cycle, the loss is calculated numerous times (once per training example), but the cost function is calculated only once. The cost function is a more general formulation that combines the objective and the loss function: it is calculated as the average of the per-example losses.
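A small sketch makes the counting concrete. It assumes a squared-error loss and four made-up predictions; `squared_loss` and `average_cost` are hypothetical helper names. The loss is evaluated four times, once per example, while the cost is a single number for the whole pass.

```python
# Minimal sketch (assumption: squared-error loss, toy data). The loss is
# evaluated once per training example; the cost once per pass over the data.

def squared_loss(y_true, y_pred):
    """Loss for a single training example."""
    return (y_true - y_pred) ** 2


def average_cost(y_trues, y_preds):
    """Cost for the whole data set: the mean of the per-example losses."""
    losses = [squared_loss(t, p) for t, p in zip(y_trues, y_preds)]
    return sum(losses) / len(losses)


y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 8.0, 9.5]

per_example = [squared_loss(t, p) for t, p in zip(y_true, y_pred)]
print(per_example)                   # [0.25, 0.0, 1.0, 0.25]: four loss values
print(average_cost(y_true, y_pred))  # 0.375: one cost value for the cycle
```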

One of the recommendations in the Coursera Machine Learning course when working with gradient-descent-based algorithms is to plot the cost function J(θ) against the number of iterations. In short, the loss function is a part of the cost function: the cost can combine the penalty between prediction and label (the loss) with a regularization term.
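The split between the data term and the regularization term can be shown with a minimal sketch. It assumes linear regression with a squared-error loss and an L2 penalty λ/(2m) Σ θⱼ² that leaves the bias θ₀ unpenalized; this is one common form, and other regularizers exist. `regularized_cost` is a hypothetical name.

```python
# Minimal sketch (assumptions: linear regression, squared-error loss, L2
# penalty that skips the bias theta[0]). regularized_cost is hypothetical.

def regularized_cost(theta, xs, ys, lam):
    """Return (total cost, data term, regularization term)."""
    m = len(xs)
    # Prediction: theta[0] + theta[1] * x1 + theta[2] * x2 + ...
    preds = [theta[0] + sum(t * f for t, f in zip(theta[1:], x)) for x in xs]
    # Data term: average squared penalty between prediction and label
    data_term = sum((p - y) ** 2 for p, y in zip(preds, ys)) / (2 * m)
    # Regularization term: penalizes large weights, independent of the labels
    reg_term = lam * sum(t ** 2 for t in theta[1:]) / (2 * m)
    return data_term + reg_term, data_term, reg_term


xs = [[1.0], [2.0], [3.0], [4.0]]
ys = [3.0, 5.0, 7.0, 9.0]
theta = [1.0, 2.0]  # fits the data exactly

total, data_term, reg_term = regularized_cost(theta, xs, ys, lam=1.0)
print(data_term)  # 0.0: no prediction error
print(reg_term)   # 0.5: 1.0 * 2^2 / (2 * 4)
print(total)      # 0.5
```

Even a perfect fit has a non-zero cost here, which is exactly the part of the cost that the per-example loss does not account for.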

You can learn more about cost and loss functions by enrolling in the Machine Learning course. For a formal treatment, see Hastie et al.'s textbook The Elements of Statistical Learning (p. 37). The loss function is a value that is calculated for every instance.

In this post, I will explain some machine learning (ML) concepts. The missing link between loss and cost is the complete data: the loss is computed per instance, and the cost aggregates it over the whole data set.

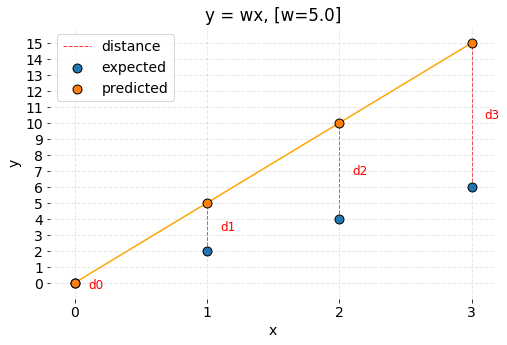

Choosing the best model based on its loss alone would not always work, because models trained with different loss functions report values on different scales.

Gradient descent and the cost function: in logistic regression for binary classification, consider a simple image classifier that takes images as input and predicts the probability that each one belongs to a specific category.
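A minimal sketch of that setup, under loud assumptions: each "image" is already reduced to a two-number feature vector (the values and labels below are invented), the loss is binary cross-entropy (log loss), and the cost is its average, minimized with batch gradient descent. All function names are hypothetical.

```python
import math

# Minimal sketch (assumptions: images already turned into 2-D feature
# vectors, binary cross-entropy loss, batch gradient descent, toy data).


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability that feature vector x belongs to the target category."""
    return sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))


def log_loss(y, p, eps=1e-12):
    """Binary cross-entropy for one example (y is 0 or 1, p is P(y=1))."""
    p = min(max(p, eps), 1 - eps)  # avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def cost(w, b, X, y):
    """Cost: average of the per-example log losses."""
    return sum(log_loss(yi, predict_proba(w, b, xi)) for xi, yi in zip(X, y)) / len(X)


def gradient_step(w, b, X, y, alpha):
    """One batch gradient-descent update of the weights and bias."""
    m = len(X)
    errors = [predict_proba(w, b, xi) - yi for xi, yi in zip(X, y)]
    grad_w = [sum(e * xi[j] for e, xi in zip(errors, X)) / m for j in range(len(w))]
    grad_b = sum(errors) / m
    return [wj - alpha * gj for wj, gj in zip(w, grad_w)], b - alpha * grad_b


X = [[0.2, 0.1], [0.4, 0.3], [0.8, 0.9], [0.9, 0.7]]  # invented features
y = [0, 0, 1, 1]                                      # invented labels

w, b = [0.0, 0.0], 0.0
print(round(cost(w, b, X, y), 4))  # 0.6931, i.e. ln 2 when every p is 0.5
for _ in range(100):
    w, b = gradient_step(w, b, X, y, alpha=1.0)
print(cost(w, b, X, y) < math.log(2))  # True: gradient descent lowered the cost
```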

We're going to discuss this topic in Chapter 9, Neural Networks for Machine Learning. Machine learning models require a high level of accuracy to work in the real world. In physics, the energy of a system might describe the movement inside that system.

This post goes through several loss functions and their applications in machine learning and deep learning. One example is the hinge loss function used in SVMs. Now plot the cost function J(θ) over the number of iterations of gradient descent.
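For the hinge loss, a short sketch may help. It assumes the usual SVM convention of labels in {-1, +1} and a raw score w·x + b that has already been computed; the scores below are invented, and `hinge_loss` is a hypothetical helper. The averaged value at the end plays the role of the cost (without any regularization term).

```python
# Minimal sketch (assumptions: labels in {-1, +1}, precomputed raw SVM
# scores w.x + b, toy values). hinge_loss is a hypothetical helper.

def hinge_loss(y, score):
    """Hinge loss for one example: max(0, 1 - y * score)."""
    return max(0.0, 1.0 - y * score)


examples = [
    (+1, 2.0),   # correct side, outside the margin -> loss 0
    (+1, 0.5),   # correct side, inside the margin  -> small loss
    (-1, 0.3),   # wrong side                       -> loss above 1
    (-1, -1.5),  # correct side, outside the margin -> loss 0
]

losses = [hinge_loss(label, score) for label, score in examples]
for (label, score), loss in zip(examples, losses):
    print(label, score, loss)

average_hinge = sum(losses) / len(losses)  # cost over the four examples
print(average_hinge)
```

Unlike log loss, the hinge loss is exactly zero for examples that are classified correctly with enough margin, so they stop influencing training.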

How about mean squared error? Broadly, loss functions can be classified into two categories: regression losses and classification losses. If the goal is to minimize a system's energy, one way to achieve this is to use the energy function as a loss function and minimize it directly.
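To see what mean squared error (MSE) emphasizes, the sketch below compares it with mean absolute error (MAE), a second regression loss added here only as a point of comparison. The predictions are invented: one set is off by 0.5 everywhere, the other is exact except for a single error of 2.0.

```python
# Minimal sketch (toy data): MSE vs. MAE as regression losses.

def mse(y_true, y_pred):
    """Mean squared error: large errors are penalized quadratically."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error: every unit of error counts the same."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1.0, 2.0, 3.0, 4.0]
small_errors = [1.5, 2.5, 3.5, 4.5]  # every prediction off by 0.5
one_outlier = [1.0, 2.0, 3.0, 6.0]   # three exact, one off by 2.0

print(mse(y_true, small_errors), mae(y_true, small_errors))  # 0.25 0.5
print(mse(y_true, one_outlier), mae(y_true, one_outlier))    # 1.0 0.5
```

Both prediction sets have the same MAE, but MSE is four times larger for the one with a single big miss, which is why MSE is sensitive to outliers.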

While the loss function applies to a single training example, the cost function accounts for the entire data set. The cost function is a measure used to judge the model correctly; understanding it tells you how well the model has estimated the relationship between your input and output. However, for now it's useful to consider a loss function as an intermediate between our training process and a pure mathematical optimization.

This is where the cost function comes into the picture. If all of those se…

A loss or cost function is an important concept to understand if you want to grasp how a neural network trains itself. The cost function J is a function of the fitting parameters θ. A metric is used after the learning process.

A loss function is used to train your model. But how do you calculate how right or wrong your model is?
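The difference between a training loss and an evaluation metric shows up clearly on two invented sets of predicted probabilities for the same four labels. This sketch assumes log loss as the training loss and accuracy at a 0.5 threshold as the metric; other pairings are common. Both "models" get every label right, so accuracy cannot tell them apart, while the log loss still rewards the more confident one.

```python
import math

# Minimal sketch (assumptions: log loss as training loss, accuracy at a 0.5
# threshold as evaluation metric, invented predictions).


def log_loss(y_true, probs):
    """Training loss: mean binary cross-entropy."""
    return -sum(
        y * math.log(p) + (1 - y) * math.log(1 - p) for y, p in zip(y_true, probs)
    ) / len(y_true)


def accuracy(y_true, probs, threshold=0.5):
    """Evaluation metric: fraction of correct hard predictions."""
    return sum((p >= threshold) == bool(y) for y, p in zip(y_true, probs)) / len(y_true)


y_true = [1, 0, 1, 0]
model_a = [0.9, 0.1, 0.8, 0.2]    # confident and correct
model_b = [0.6, 0.4, 0.55, 0.45]  # barely correct

for name, probs in [("A", model_a), ("B", model_b)]:
    print(name, accuracy(y_true, probs), round(log_loss(y_true, probs), 3))
# A 1.0 0.164
# B 1.0 0.554
```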
