Machine Learning Feature Engineering Variable Selection

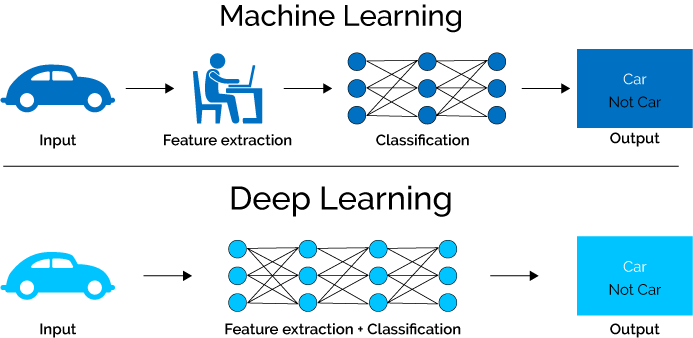

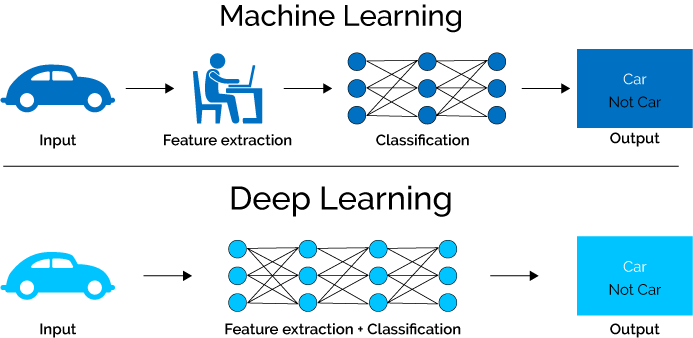

The performance of a model depends heavily on the features it is given. One of the advantages of deep learning is that it completely automates what used to be the most crucial step in a machine-learning workflow: feature engineering.

Feature Engineering Towards Data Science

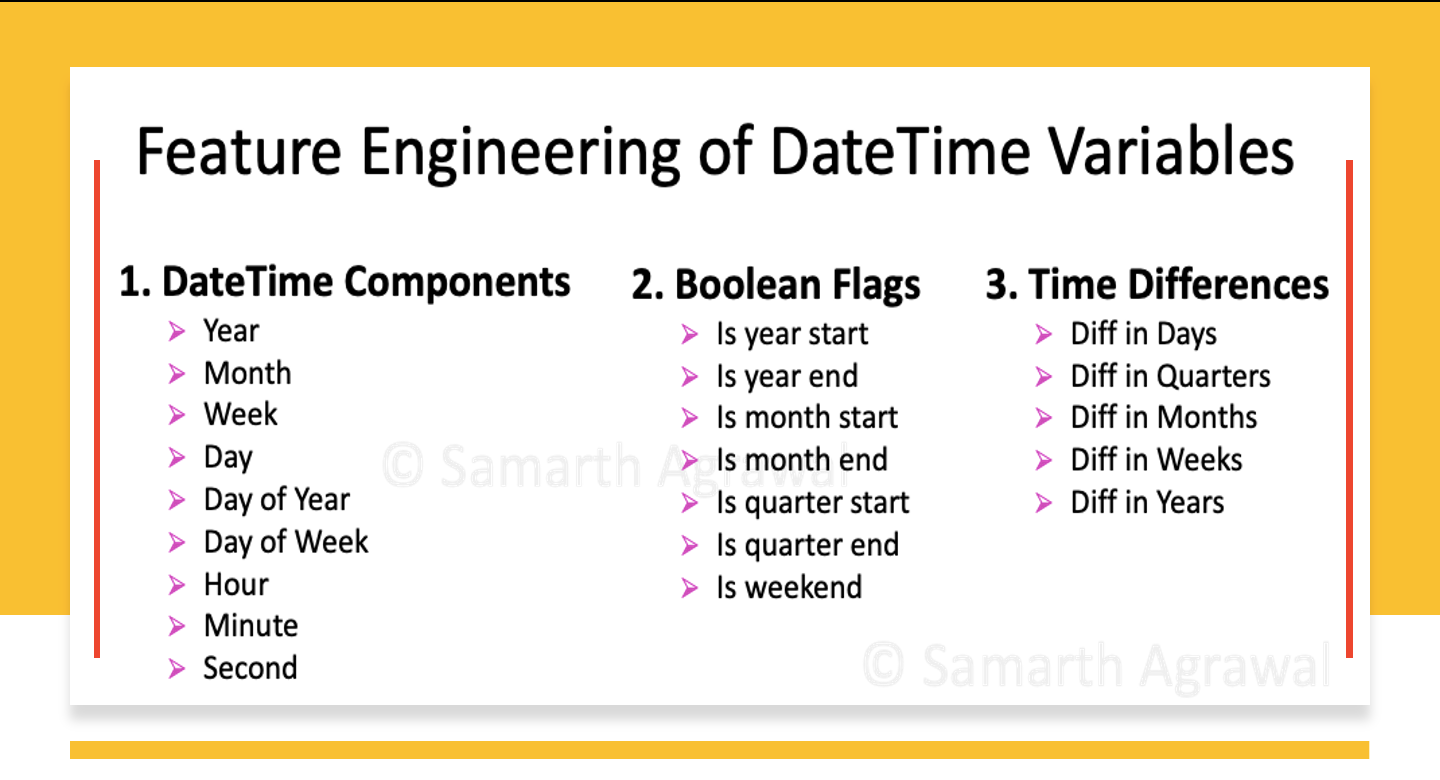

Samarth Agrawal is a Data Scientist at Toyota and a Data Science practitioner and communicator.

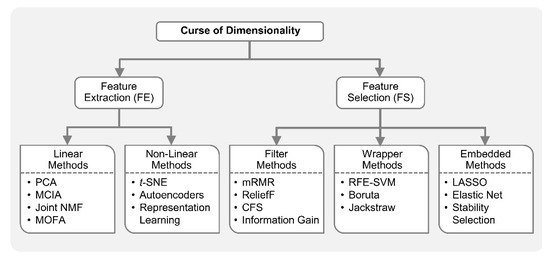

Feature selection refers to the methods applied to a dataset to identify the features that have the greatest impact on the dependent variable. Automated feature engineering aims to help the data scientist by automatically creating many candidate features from a dataset, from which the best can be selected and used for training.
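As a rough illustration of what "creating many candidate features" can mean, the sketch below combines every pair of numeric columns into a product and a ratio. The function name `candidate_features` and the column names are hypothetical, and this is a toy under those assumptions, not a description of how any particular library generates features.

```python
from itertools import combinations
from math import nan


def candidate_features(row: dict) -> dict:
    """Return the raw columns plus a product and a ratio for every column pair."""
    out = dict(row)
    for a, b in combinations(sorted(row), 2):
        out[f"{a}_times_{b}"] = row[a] * row[b]
        # Guard against division by zero; NaN marks an undefined ratio.
        out[f"{a}_per_{b}"] = row[a] / row[b] if row[b] != 0 else nan
    return out


# Hypothetical example row.
row = {"price": 200.0, "area": 50.0, "rooms": 2.0}
expanded = candidate_features(row)
for name, value in expanded.items():
    print(name, value)
```

Three raw columns already yield six candidates, which is why a selection step normally follows generation.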

We will use an example dataset to show the basics. Feature engineering follows next, and we begin that process by evaluating the baseline performance of the data at hand. Feature engineering means creating a new feature even though the raw feature could have been used as is, whereas feature extraction means creating new features because the raw data cannot be used directly in the analysis, such as converting an image to RGB values.
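One common case of creating new features from a usable raw one is a date column. The sketch below expands a single timestamp into several numeric features using only the Python standard library; the function name `date_features` and the chosen feature set are assumptions made for illustration.

```python
from datetime import datetime


def date_features(ts: datetime) -> dict:
    """Expand one timestamp into several numeric features."""
    return {
        "year": ts.year,
        "month": ts.month,
        "day": ts.day,
        "day_of_week": ts.weekday(),            # Monday = 0 ... Sunday = 6
        "day_of_year": ts.timetuple().tm_yday,
        "is_weekend": int(ts.weekday() >= 5),
        "is_month_start": int(ts.day == 1),
    }


features = date_features(datetime(2021, 3, 6))
print(features)
```

Whether any of these derived columns actually helps is an empirical question, to be checked against the baseline.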

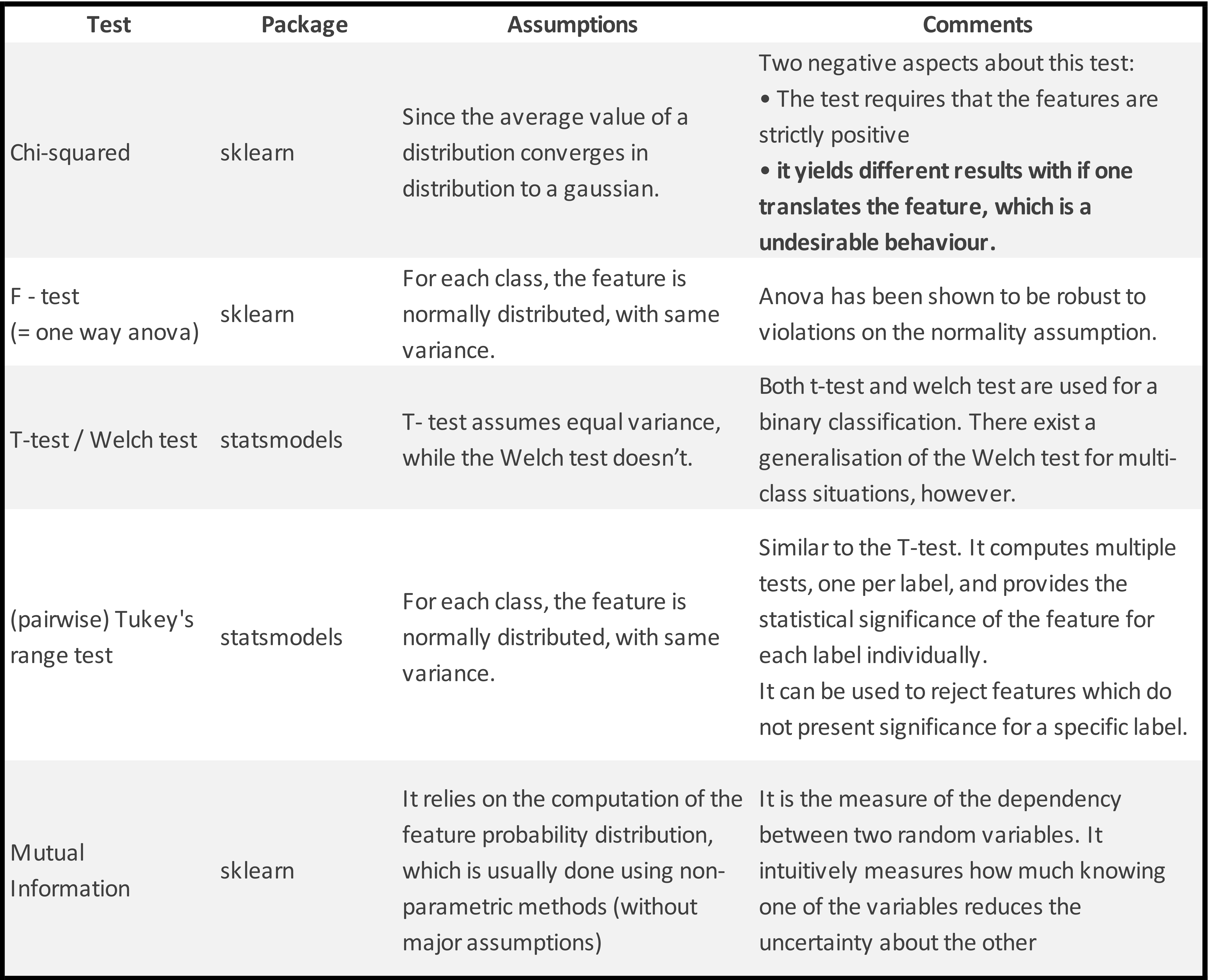

Feature selection is an important step in the machine learning model-building process. In this article we will walk through an example of using automated feature engineering with the featuretools Python library.

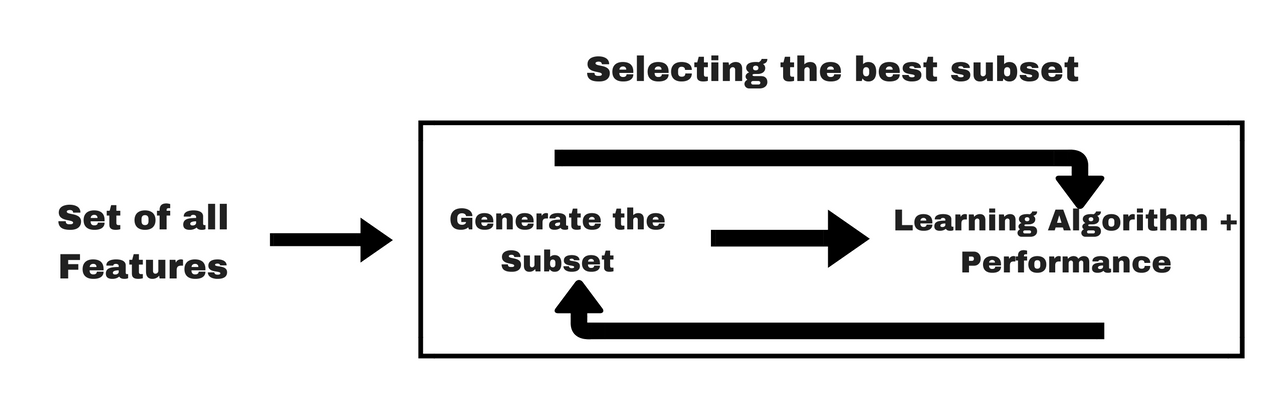

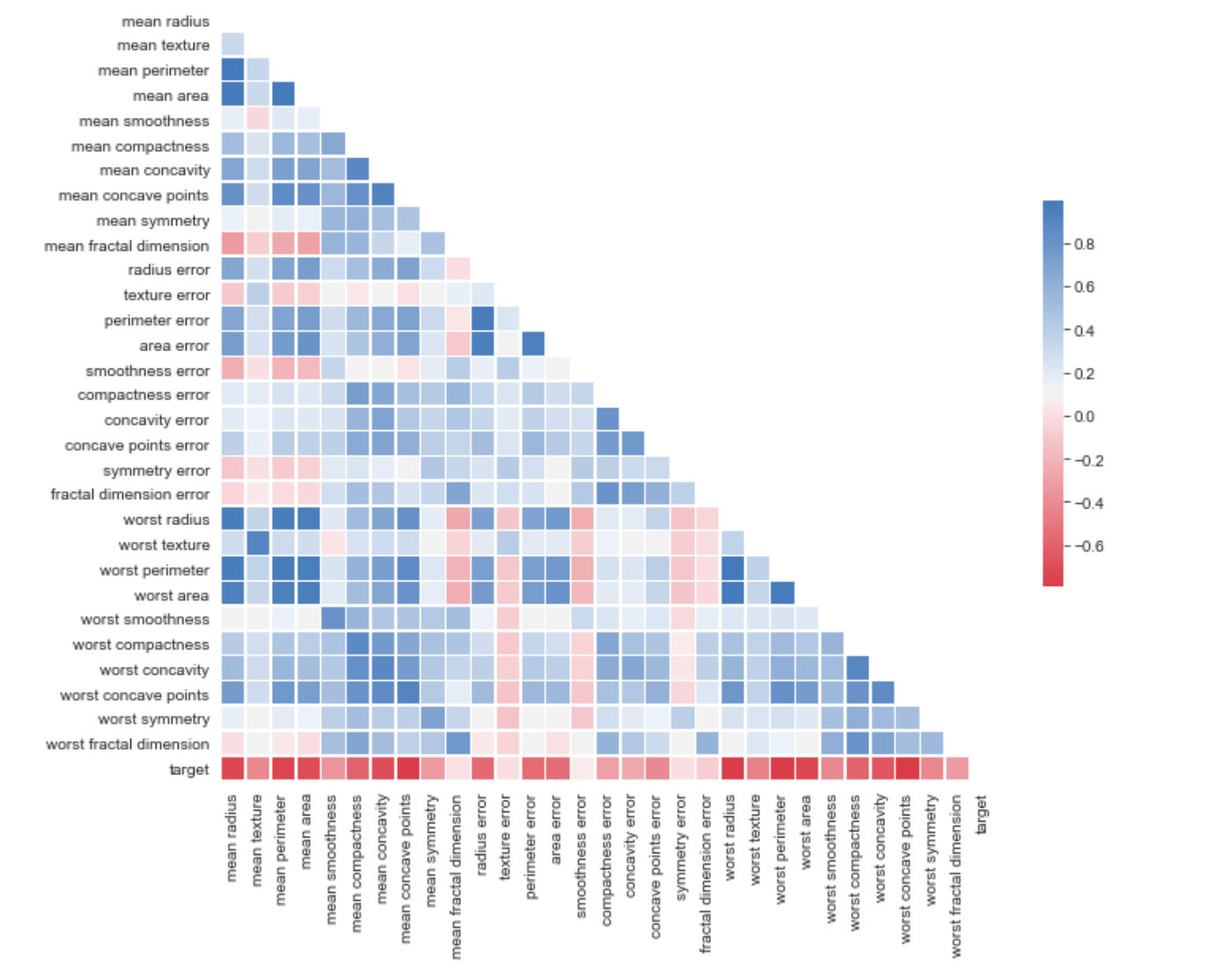

We then iteratively construct features, evaluating model performance after each change and comparing it with the baseline, and use feature selection to keep only what helps, until we are satisfied with the results. The best feature to add in each iteration is determined by some criterion, which could simply be the lowest cross-validation error, the lowest p-value, or any other test or measure of accuracy.
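The iterative procedure described above, adding at each step the feature that gives the lowest cross-validation error, can be sketched as a greedy forward search. The example below is a minimal sketch under stated assumptions: the model is a 1-nearest-neighbour classifier scored by leave-one-out error (chosen only because it fits in a few lines of plain Python), and the data and the names `loo_error` and `forward_selection` are hypothetical.

```python
def loo_error(rows, labels, cols):
    """Leave-one-out error of a 1-nearest-neighbour classifier using only `cols`."""
    mistakes = 0
    for i, row in enumerate(rows):
        best_dist, best_label = float("inf"), None
        for j, other in enumerate(rows):
            if i == j:
                continue  # the held-out row may not vote for itself
            dist = sum((row[c] - other[c]) ** 2 for c in cols)
            if dist < best_dist:
                best_dist, best_label = dist, labels[j]
        mistakes += int(best_label != labels[i])
    return mistakes / len(rows)


def forward_selection(rows, labels, n_features):
    """Greedily add the feature that lowers the error most; stop when none does."""
    selected, best_error = [], float("inf")
    remaining = list(range(n_features))
    while remaining:
        # Score each remaining feature when added to the current subset.
        scored = [(loo_error(rows, labels, selected + [c]), c) for c in remaining]
        error, candidate = min(scored)
        if error >= best_error:
            break  # no candidate improves on the current subset
        selected.append(candidate)
        remaining.remove(candidate)
        best_error = error
    return selected, best_error


# Toy data: column 1 separates the classes, columns 0 and 2 are noise.
rows = [
    [5.0, 1.0, 0.3],
    [1.0, 1.2, 0.9],
    [3.0, 0.9, 0.1],
    [4.9, 3.0, 0.8],
    [1.1, 3.1, 0.2],
    [3.1, 2.9, 0.7],
]
labels = [0, 0, 0, 1, 1, 1]

selected, best_error = forward_selection(rows, labels, n_features=3)
print(selected, best_error)
```

On this toy data the search keeps only column 1 and stops, since adding either noise column cannot lower an error that is already zero. In practice the scoring function would be swapped for whatever model and validation scheme the project actually uses.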

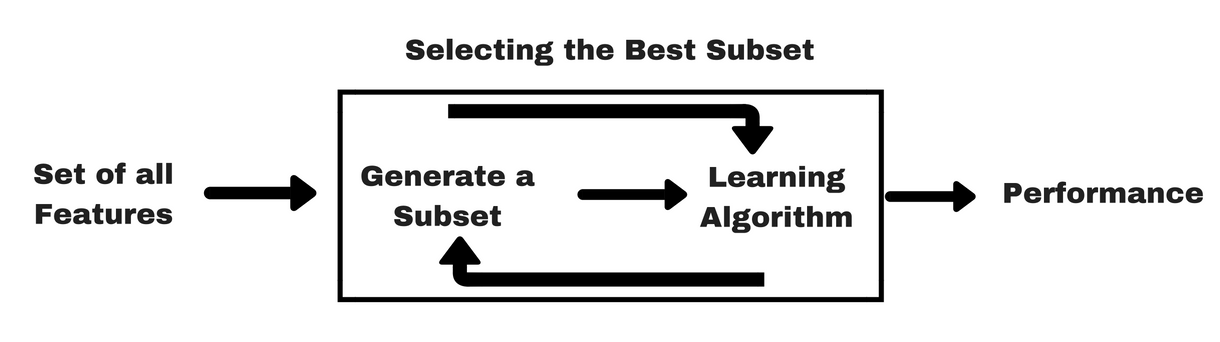

Forward selection is one of the wrapper techniques: we start with no features and iteratively add the feature that most improves the performance of the machine learning model at each step.

When done well, feature selection helps the model by reducing the number of variables and noisy features. You therefore have to extract features from the raw dataset you have collected before training machine learning algorithms on it. Feature engineering refers to the process of selecting and transforming variables (features) in your dataset when creating a predictive model using machine learning.

Feature engineering and feature extraction are similar: both refer to creating new features from existing features. Feature selection, by contrast, is the process of selecting the key subset of features to reduce the dimensionality of the training problem.

The goal is to improve the ML model's ability to predict outcomes for previously unseen data. Feature selection can help achieve this effectively by removing irrelevant or noisy features. Normally, feature engineering is applied first to generate additional features, and feature selection is then done to eliminate irrelevant, redundant, or highly correlated features.
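Eliminating highly correlated features can be sketched as a simple filter: keep a column only if its absolute Pearson correlation with every column kept so far is below a threshold. The threshold of 0.95, the function names, and the example columns are all assumptions chosen for illustration.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (neither may be constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def drop_correlated(columns, threshold=0.95):
    """Return the names of columns kept after removing near-duplicates."""
    kept = []
    for name, values in columns.items():
        # Keep the column only if it is not too correlated with any kept column.
        if all(abs(pearson(values, columns[k])) < threshold for k in kept):
            kept.append(name)
    return kept


# Hypothetical data: height_in is a unit conversion of height_cm.
columns = {
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "age": [31.0, 25.0, 47.0, 22.0, 38.0],
}
kept = drop_correlated(columns)
print(kept)
```

The filter is order-dependent: of two correlated columns, the one that appears first is kept, so it can be worth ordering columns by how useful or interpretable they are before filtering.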

Feature Engineering of DateTime Variables

Genes Free Full Text Machine Learning And Integrative Analysis Of Biomedical Big Data Html

Feature Engineering For Machine Learning Data Science Primer

Feature Selection In Machine Learning Feature Selection Techniques With Examples Simplilearn Youtube

Feature Selection Methods Machine Learning

Getting Data Ready For Modelling Feature Engineering Feature Selection Dimension Reduction Part Two By Akash Desarda Towards Data Science

What Is Feature Engineering Displayr

What Are Feature Variables In Machine Learning Datarobot Ai Wiki

Cnn Application On Structured Data Automated Feature Extraction By Sourish Dey Towards Data Science

Feature Selection Techniques For Classification And Python Tips For Their Application By Gabriel Azevedo Towards Data Science

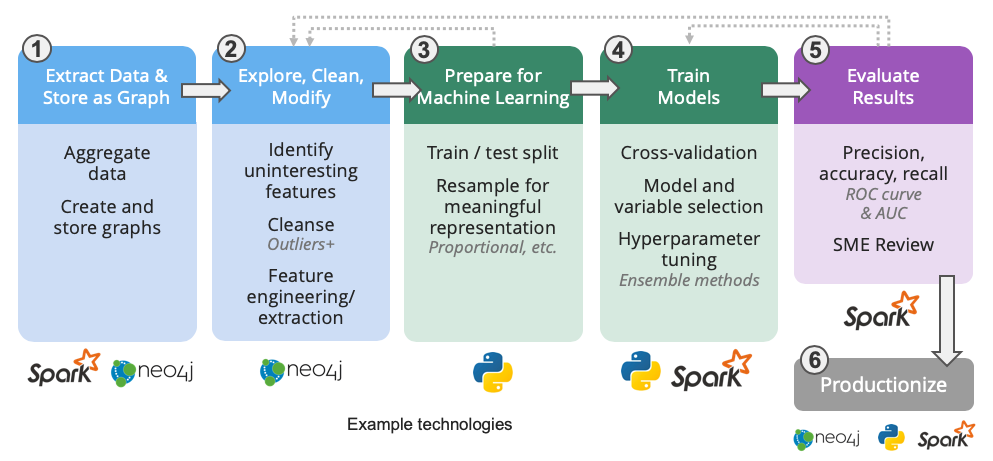

How Graph Algorithms Improve Machine Learning O Reilly

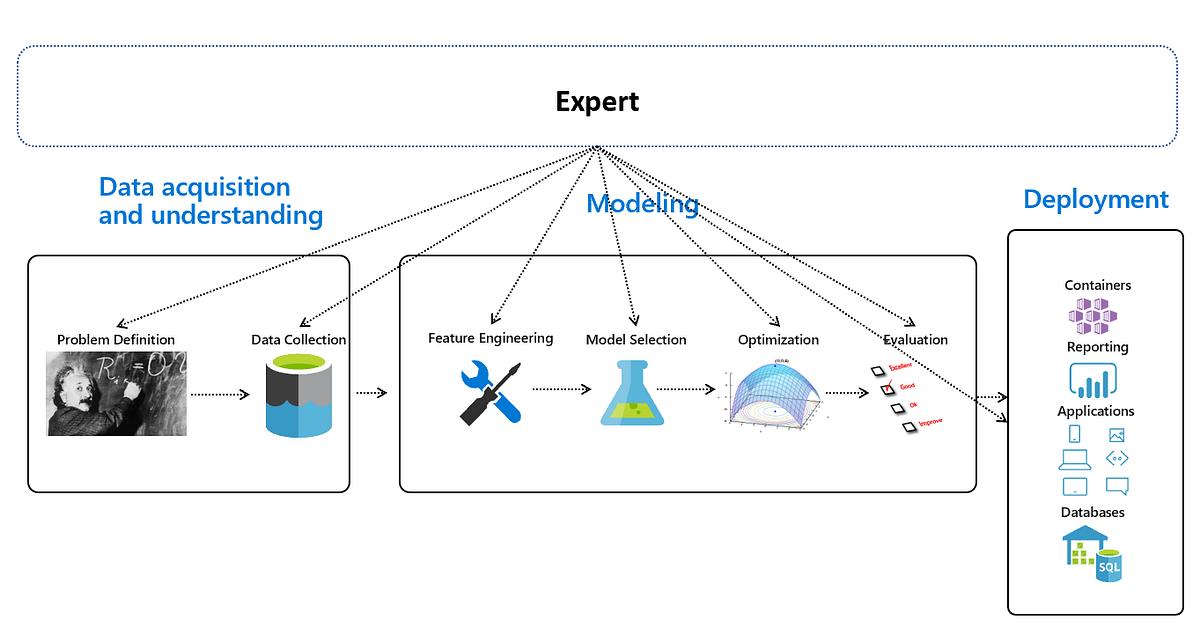

Automated Machine Learning An Overview By Think Gradient Thinkgradient Medium

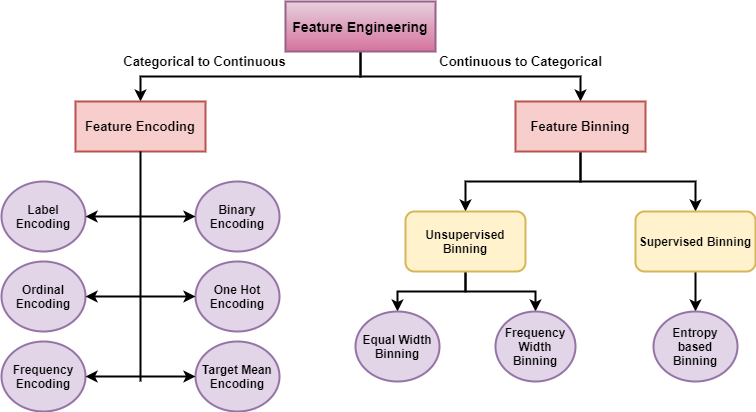

Feature Engineering Deep Dive Into Encoding And Binning Techniques By Satyam Kumar Towards Data Science

The Art Of Finding The Best Features For Machine Learning By Rebecca Vickery Towards Data Science

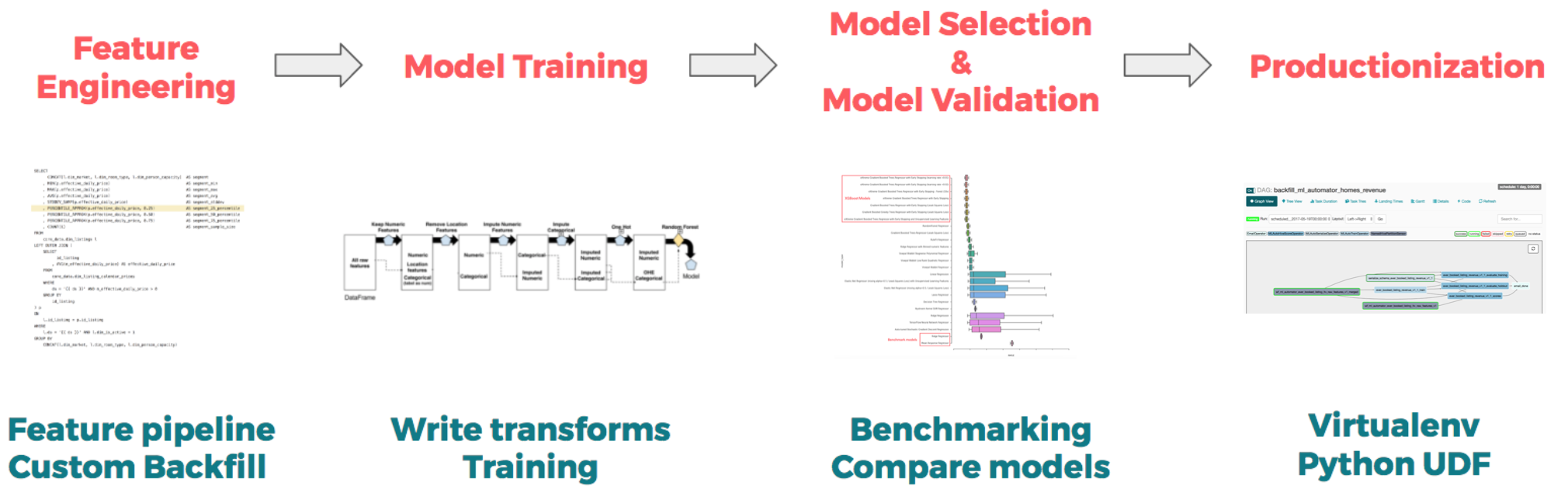

Using Machine Learning To Predict Value Of Homes On Airbnb By Robert Chang Airbnb Engineering Data Science Medium
