Machine Learning Loss Not Decreasing

Standardize and normalize the data. After this step the training loss should decrease, although the test loss may still increase.
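As a minimal sketch of standardization, the snippet below computes the mean and standard deviation on the training values only and reuses them for the test values. The function names `fit_standardizer` and `standardize` and the sample numbers are illustrative assumptions, not taken from any particular library.

```python
import statistics


def fit_standardizer(train_values):
    """Return (mean, std) computed from the training values only."""
    mean = statistics.fmean(train_values)
    std = statistics.pstdev(train_values)
    # Guard against a constant feature, which would give std == 0.
    if std == 0:
        std = 1.0
    return mean, std


def standardize(values, mean, std):
    """Shift and scale values with previously fitted statistics."""
    return [(v - mean) / std for v in values]


# Hypothetical single-feature data.
train = [2.0, 4.0, 6.0, 8.0]
test = [5.0, 10.0]

mean, std = fit_standardizer(train)
train_scaled = standardize(train, mean, std)
# The test split reuses the training statistics to avoid leaking test data.
test_scaled = standardize(test, mean, std)
```

Fitting the statistics on the training split alone keeps the test split a fair estimate of performance on unseen data.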


Dealing with such a Model.

When machine learning loss is not decreasing, one thing to watch is the decrease in the loss, used as the metric, on the training step. This is the DALL-E component that generates 256x256 pixel images from a 32x32 grid of numbers, each with 8192 possible values, and vice versa.

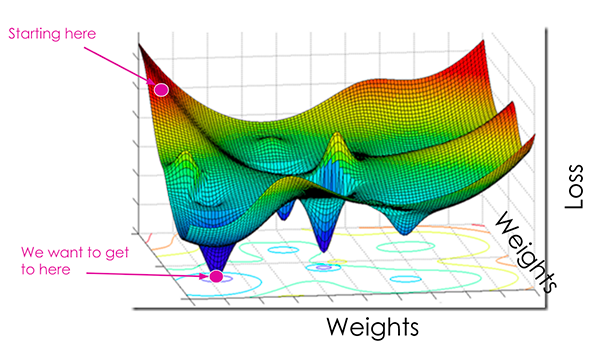

The goal is to obtain higher validation/testing accuracy. A common symptom is that the model is updating its weights but the loss stays constant. One hypothesis is that the network has landed on a plateau. To test this hypothesis, set the learning rate to a small value and configure all initializers to generate small values too; the network may then avoid jumping onto the plateau and move toward the global minimum instead.

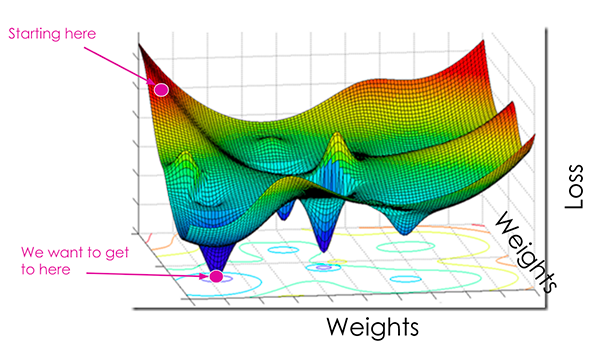

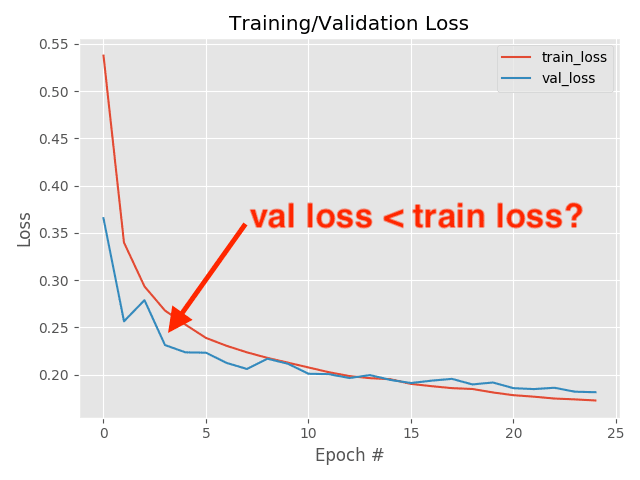

Regularization methods often sacrifice training accuracy to improve validation/testing accuracy; in some cases that can lead to your validation loss being lower than your training loss. An iterative approach is one widely used method for reducing loss, and is as easy and efficient as walking down a hill. When the loss is not decreasing, it's hard to debug your model with so little information, but maybe some of these ideas will help you in some way.

The network may be too shallow.

In supervised learning, a machine learning algorithm builds a model by examining many examples and attempting to find a model that minimizes loss. Try an AlexNet or VGG style to build your network, or read existing cifar10 and mnist examples.

Learn how to train a model using an iterative approach. If the model is too complex, add dropout, or reduce the number of layers or the number of neurons in each layer. To train a model, we need a good way to reduce the model's loss.
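One way to picture the iterative approach to reducing loss is plain gradient descent on a tiny linear model. The sketch below is illustrative only: the data, the learning rate of 0.05, the step count, and the helper names `mse` and `gradient_descent` are all assumptions chosen for the example.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the line y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, learning_rate=0.05, steps=500):
    """Fit w and b by repeatedly stepping against the MSE gradient."""
    w, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(steps):
        losses.append(mse(w, b, xs, ys))
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Move a small step downhill.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, losses


# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b, losses = gradient_descent(xs, ys)
print(f"w={w:.3f} b={b:.3f} first loss={losses[0]:.3f} last loss={losses[-1]:.6f}")
```

If the recorded losses do not shrink on a problem this simple, the learning rate or the gradient code is the first place to look.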

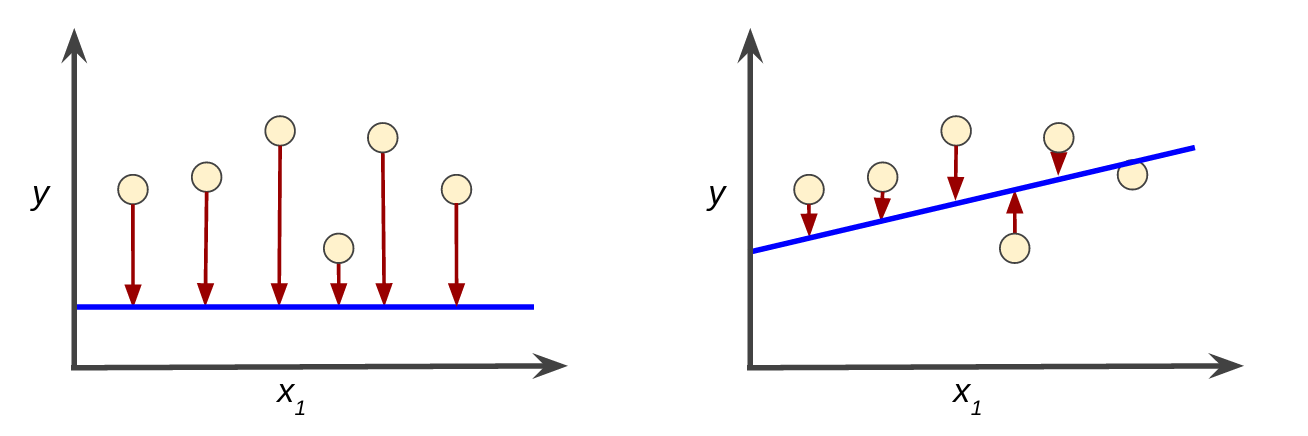

Because your model is changing over time, the loss over the first batches of an epoch is generally higher than over the last batches. Try to overfit your network. In the case discussed, the model is overfitting right from epoch 10: the validation loss is increasing while the training loss is decreasing. Check whether the model is too complex.

Understand full gradient descent and some of its variants. The aim is, ideally, to generalize better to data outside the validation and testing sets. A warning sign is a model that does not even overfit on only three training examples.

Remove regularization gradually, and maybe switch batch norm for a few layers. I never had to get here, but if you're using BatchNorm you would expect approximately standard normal distributions. Once your model starts to memorize the training data, its generalization capabilities are reduced.

With specific datasets, a neural network can get stuck on a local plateau (not a minimum, however) from which it does not escape. Finding a model that minimizes loss over many examples is called empirical risk minimization.

When both the training loss and the validation loss are still decreasing, the model is said to be underfit. By contrast, when the validation loss is increasing while the training loss is decreasing, the model is overfitting. In that case, reduce the learning rate.
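The divergence between training and validation loss can be checked programmatically. The following is a rough sketch under assumed inputs: `overfitting_onset` is a hypothetical helper, the `patience` of 3 epochs is an arbitrary choice, and the loss values are made up.

```python
def overfitting_onset(train_losses, val_losses, patience=3):
    """Return the last epoch index before validation loss rose for
    `patience` consecutive epochs while training loss kept falling.
    Returns None if no such stretch exists."""
    run = 0
    for epoch in range(1, min(len(train_losses), len(val_losses))):
        val_up = val_losses[epoch] > val_losses[epoch - 1]
        train_down = train_losses[epoch] < train_losses[epoch - 1]
        # Count consecutive epochs showing the overfitting pattern.
        run = run + 1 if (val_up and train_down) else 0
        if run == patience:
            return epoch - patience
    return None


# Hypothetical per-epoch losses.
train_losses = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2]
val_losses = [1.1, 0.9, 0.7, 0.75, 0.8, 0.9, 1.0]

onset = overfitting_onset(train_losses, val_losses)
print("validation loss was lowest at epoch index:", onset)
```

The returned index is a natural candidate for the checkpoint to keep, since later epochs only improved the fit to the training data.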


It's hard to learn with only a convolutional layer and a fully connected layer. Another symptom to look for is a decrease in the accuracy, used as the metric, on the validation or test step. Visualize the distribution of weights and biases for each layer.
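Before plotting full histograms, a quick numeric summary of each layer's weights and biases can already reveal problems. This is a small sketch: `summarize_layers` is a hypothetical helper, and the layer names and values are invented stand-ins for parameters exported from a real model.

```python
import statistics


def summarize_layers(layers):
    """Compute basic distribution statistics for each named parameter list."""
    summary = {}
    for name, values in layers.items():
        summary[name] = {
            "mean": statistics.fmean(values),
            "std": statistics.pstdev(values),
            "min": min(values),
            "max": max(values),
        }
    return summary


# Hypothetical flattened parameters, keyed by layer name.
layers = {
    "conv1.weight": [-0.2, 0.1, 0.0, 0.1],
    "fc.bias": [0.0, 0.0, 0.0, 0.0],
}

summary = summarize_layers(layers)
for name, stats in summary.items():
    print(name, stats)
```

A standard deviation of exactly zero, as in the made-up `fc.bias` entry, suggests parameters that never moved from their initial value and deserve a closer look.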

On the other hand, the testing loss for an epoch is computed using the model as it is at the end of the epoch, resulting in a lower loss. What you are facing is overfitting, and it can occur with any machine learning algorithm, not only neural nets. The learning rate and decay rate are also worth checking.
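To make the relationship between learning rate and decay rate concrete, here is a sketch of one common schedule, exponential decay per epoch. The function name `decayed_learning_rate` and the values 0.1 and 0.5 are assumptions for illustration; other schedules (step, cosine, and so on) are equally valid.

```python
def decayed_learning_rate(initial_lr, decay_rate, epoch):
    """Exponential decay: multiply the learning rate by decay_rate each epoch."""
    return initial_lr * decay_rate ** epoch


# Hypothetical settings: start at 0.1 and halve the rate every epoch.
schedule = [decayed_learning_rate(0.1, 0.5, epoch) for epoch in range(4)]
print(schedule)
```

A decay rate close to 1 shrinks the step size slowly, while a small decay rate can freeze learning after only a few epochs, which is one way to end up with a loss that stops decreasing.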

I used your network on cifar10 data, and the loss does not decrease but increases. With an activation function, it can learn something basic. It can still be trained to make better predictions.

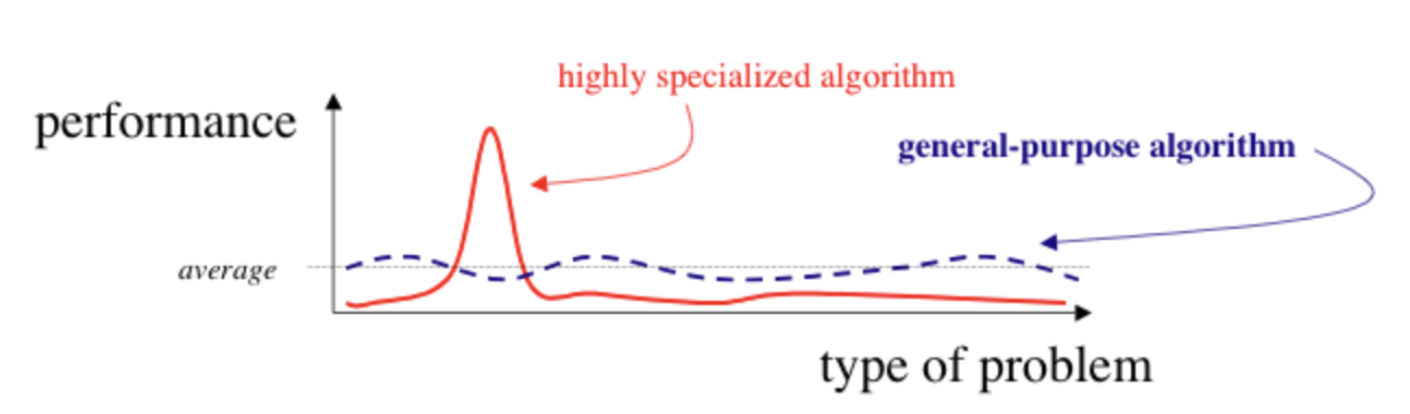

With any improvement in machine learning, it's nice to have a theoretical improvement, but it's also important to test whether it really works. This is one of the components of DALL-E, but not the entirety of DALL-E. I'm not an expert in this area, but nonetheless I'll try to provide more context about what was released today.
