Gradient Descent in Machine Learning

Gradient descent is an optimization algorithm that's used when training a machine learning model. It is not limited to linear regression: it can be applied to many machine learning methods, including linear regression and logistic regression, and it is the backbone of deep learning.

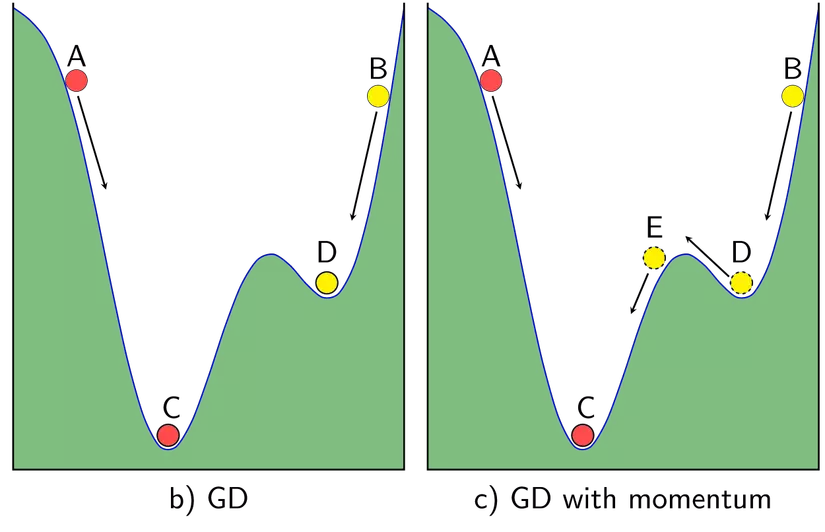

It is based on a convex function and iteratively tweaks the parameters to minimize a given function down to its local minimum (for a convex function, that local minimum is also the global one).

If gradient descent is the core of machine learning algorithms, then the derivative is the core of gradient descent. These parameters refer to the coefficients in linear regression and the weights in a neural network. `sgd` is an instance of the stochastic gradient descent optimizer with a learning rate of 0.1 and a momentum of 0.9.
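To make the learning rate and momentum settings concrete, here is a minimal hand-written sketch of a momentum update in plain Python. It is not the implementation of any particular library's optimizer: the function name `sgd_momentum_step`, the toy loss `x**2`, and the starting point are assumptions chosen for illustration, and the gradient is exact rather than estimated from a random mini-batch.

```python
def sgd_momentum_step(param, velocity, grad, learning_rate=0.1, momentum=0.9):
    """One momentum update (hypothetical helper, one common formulation).

    The velocity keeps a decaying memory of past gradients, and the
    parameter moves along the velocity.
    """
    velocity = momentum * velocity - learning_rate * grad
    param = param + velocity
    return param, velocity


def loss_grad(x):
    # Gradient of the toy loss f(x) = x**2 (an assumption for this sketch).
    return 2.0 * x


x, v = 5.0, 0.0  # arbitrary starting point, zero initial velocity
for _ in range(300):
    x, v = sgd_momentum_step(x, v, loss_grad(x))
# After the loop, x has moved close to the minimizer of x**2, which is 0.
```

With momentum, the iterate typically overshoots and oscillates around the minimum before settling, which is why the loop runs for a few hundred steps.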

Gradient descent emerged as a technique that can find a minimum quickly. In this article we will look at gradient descent, one of the most popular algorithms in machine learning. When it comes to training models, gradient descent is combined with other algorithms, and it is easy to implement and understand.

Optimization is a big part of machine learning. Almost every machine learning algorithm has an optimization algorithm at its core that minimizes its cost function. Gradient descent is a simple optimization procedure that you can use with many machine learning algorithms.

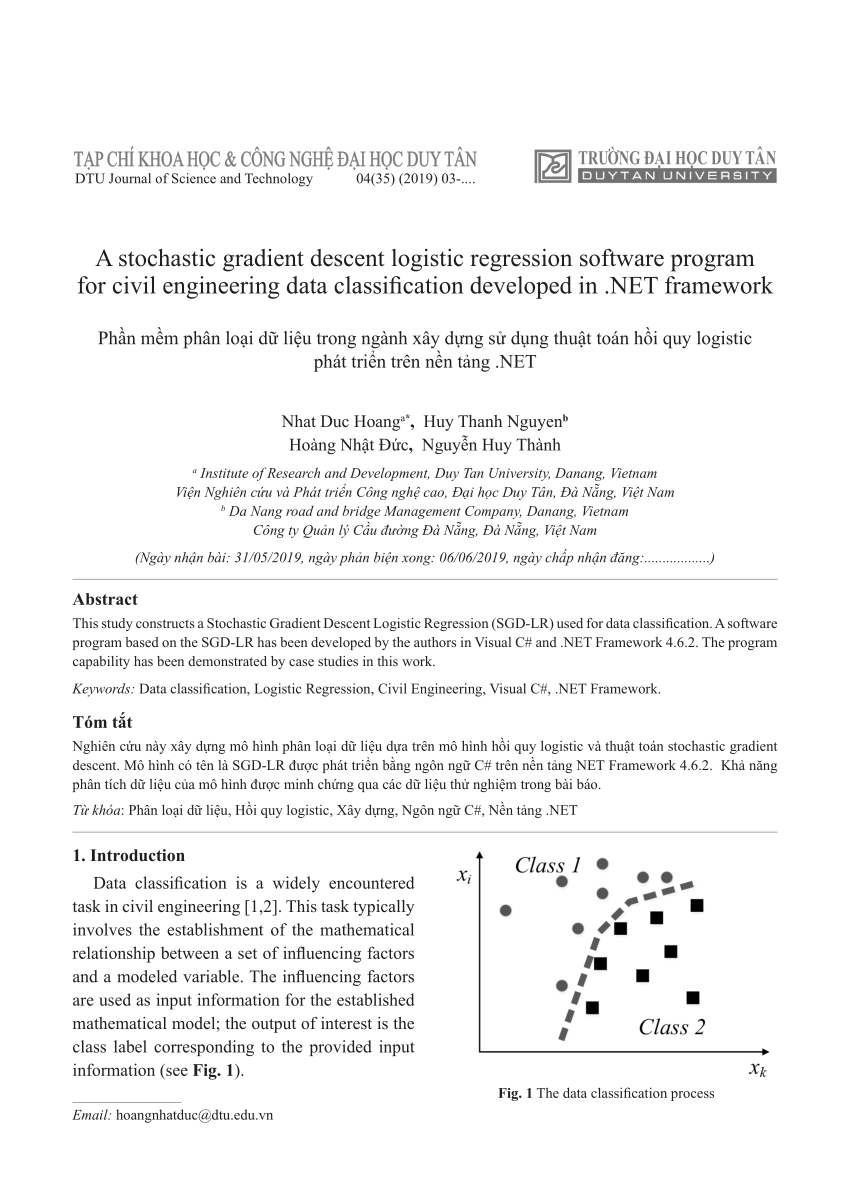

This post explains two machine learning (ML) concepts: gradient descent and the cost function. In logistic regression for binary classification, we can consider the example of a simple image classifier that takes images as input and predicts the probability of them belonging to a specific category. Gradient descent is the most widely used optimization strategy in machine learning and deep learning.
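As a rough illustration of how a cost function and gradient descent fit together in logistic regression, the sketch below trains a binary classifier on a single scalar feature. The tiny dataset, the function names, and the hyperparameters are all assumptions made for this example; a real image classifier would use many input features per image.

```python
import math


def sigmoid(z):
    # Maps any real number to a probability in (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(w, b, xs, ys):
    """Average binary cross-entropy cost over the dataset."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(xs)


def train(xs, ys, learning_rate=0.5, n_steps=200):
    """Fit weight w and bias b by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_steps):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus label
            grad_w += error * x
            grad_b += error
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


# Hypothetical toy data: negative inputs are class 0, positive are class 1.
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 0, 1, 1, 1]

initial_cost = log_loss(0.0, 0.0, xs, ys)
w, b = train(xs, ys)
final_cost = log_loss(w, b, xs, ys)
```

Comparing `initial_cost` with `final_cost` shows the cost dropping as the parameters are updated, and `sigmoid(w * x + b)` gives the predicted probability of class 1 for a new input `x`.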

During backpropagation in a network, the weights and biases are updated. A downhill movement is made by first calculating how far to move in the input space: the step size (called alpha, or the learning rate) multiplied by the gradient. Training data helps these models learn over time, and the cost function within gradient descent acts as a barometer, gauging the model's accuracy with each iteration of parameter updates.

Each of these components could be simple or complex, but at a bare minimum you will need all four when creating a custom training loop for your own models. Gradient descent is an optimization algorithm that is commonly used to train machine learning models and neural networks.

What is gradient descent? To answer that, start with the derivative: essentially, it is the amount by which y changes when we change x, also known as…
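The idea of "how much y changes when x changes" can be checked numerically. The sketch below approximates a derivative with a central difference; the helper name `numerical_derivative`, the step `h`, and the example function are assumptions for illustration only.

```python
def numerical_derivative(f, x, h=1e-6):
    """Approximate f'(x) as the change in f over a small change in x."""
    return (f(x + h) - f(x - h)) / (2 * h)


def square(x):
    return x * x


# The exact derivative of x**2 is 2*x, so the slope at x = 3 should be
# very close to 6.
slope_at_3 = numerical_derivative(square, 3.0)
```

In practice, gradient descent usually relies on an analytic or automatically computed derivative, and a numerical estimate like this one is mainly useful as a sanity check.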

In this article we will learn why such an optimization technique is needed, what gradient descent optimization is, and finally how it works with a regression model. The derivative is therefore a concept that comes up throughout machine learning, and especially in gradient descent. Optimization in machine learning is the process of updating the weights and biases in the model to minimize the model's overall loss.

Gradient descent is an iterative optimization algorithm used in machine learning and deep learning problems, usually convex optimization problems, with the goal of finding a set of variables… Optimization is an important part of machine learning and deep learning. The update rule is `x = x - step_size * f'(x)`.

Gradient descent is an optimization algorithm for finding a local minimum of a differentiable function. For this part I referred to material on Wikipedia. The gradient, scaled by the step size, is then subtracted from the current point, ensuring we move against the gradient, or down the target function.
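The step described above (scale the gradient by the step size, then subtract it from the current point) can be sketched in a few lines. The objective `f(x) = (x - 2)**2`, the starting point, and the function names are assumptions picked to keep the example small.

```python
def gradient_descent(grad, x0, step_size=0.1, n_steps=100):
    """Repeatedly move against the gradient, starting from x0."""
    x = x0
    for _ in range(n_steps):
        x = x - step_size * grad(x)
    return x


def objective_grad(x):
    # Derivative of the assumed objective f(x) = (x - 2)**2.
    return 2.0 * (x - 2.0)


# Start far from the minimum; the iterates approach x = 2.
x_min = gradient_descent(objective_grad, x0=10.0)
```

For this objective each step shrinks the distance to the minimum by a constant factor of 0.8, so 100 steps are more than enough. A step size that is too large would make the iterates diverge instead.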

Batch gradient descent refers to calculating the derivative from all training data before calculating an update. Gradient Descent is an optimization algorithm commonly used in machine learning to optimize a Cost Function or Error Function by updating the parameters of our models.

When we fit a line with linear regression, we optimize the intercept and the slope.
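As a small sketch of batch gradient descent for a line fit, the code below computes the gradient of the mean squared error over all training points before each update of the slope and intercept. The data, the function name `fit_line`, and the hyperparameters are assumptions for illustration.

```python
def fit_line(xs, ys, learning_rate=0.05, n_steps=2000):
    """Fit y = slope * x + intercept by batch gradient descent on the MSE."""
    slope, intercept = 0.0, 0.0
    n = len(xs)
    for _ in range(n_steps):
        # Use every training point to compute the gradient (batch update).
        errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
        grad_slope = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = (2.0 / n) * sum(errors)
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept


# Hypothetical noise-free data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
```

Because the data lie exactly on a line, the fitted `slope` and `intercept` end up close to 2 and 1. With noisy data they would instead approach the least-squares solution.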

