Machine Learning Loss Function Differentiable

With a stochastic gradient descent it should even matter less IMHO. But heuristically it works quite well with other optimizers Levenberg-Marq etc in particular when the trimmed fraction is small.

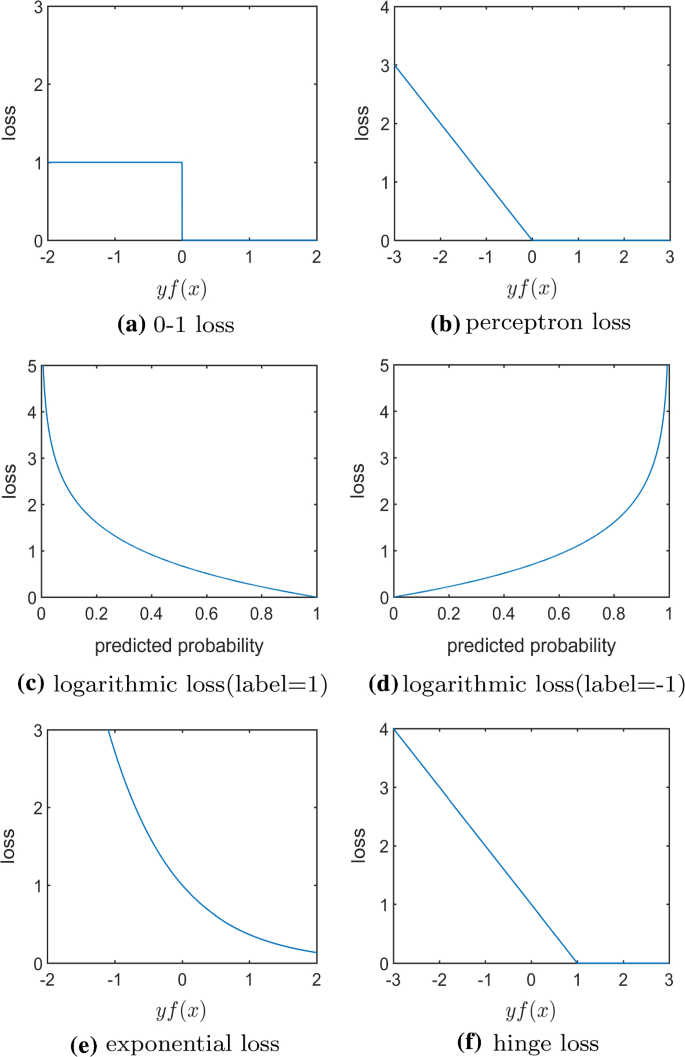

2 Visualization Of Typical Loss Functions Used In Machine Learning Download Scientific Diagram

Viewed 18 times 0 begingroup I have a.

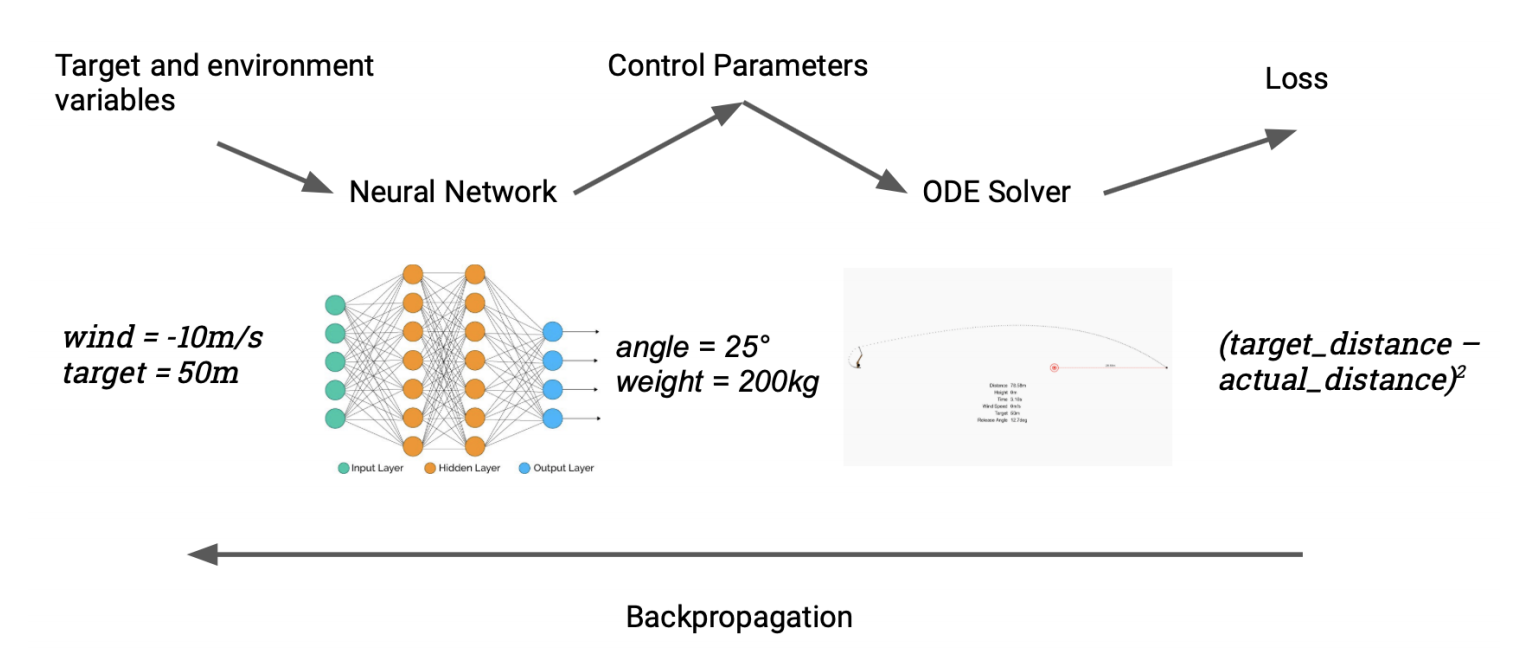

Machine learning loss function differentiable. Computing the gradient requires a differentiable loss function so you cannot train a network with backpropagation if your loss function is not differentiable. Instead of policy functions or value functions we can combine the best of both worlds. We show that this regular-ization is particularly well suited to average and cluster time series under the DTW geometry a.

Value Functions Considering Actions. In this paper that soft-DTW is a differentiable loss function and that both its value and gradi-ent can be computed with quadratic timespace complexity DTW has quadratic time but linear space complexity. 1 Is there any other differentiable loss function that can be applied in ranking problem with regression model.

2 Can rank function be approximated by a differentiable function. This doesnt have a derivative so you cant use it. Here is a source discussing some methods for direct optimization of the 0-1 loss.

Instead use cross entropy. Yes certainly strictly it is not differentiable. Hello Machine learning fellasrecently during this lockdown period while I was visiting back the basic concepts of ML I gained a better intuition perspective on some very subtle concepts.

Ask Question Asked 1 month ago. This function is differentiable but also hard to train. We propose in this paper a differentiable learning loss between time series building upon the celebrated dynamic time warping DTW discrepancy.

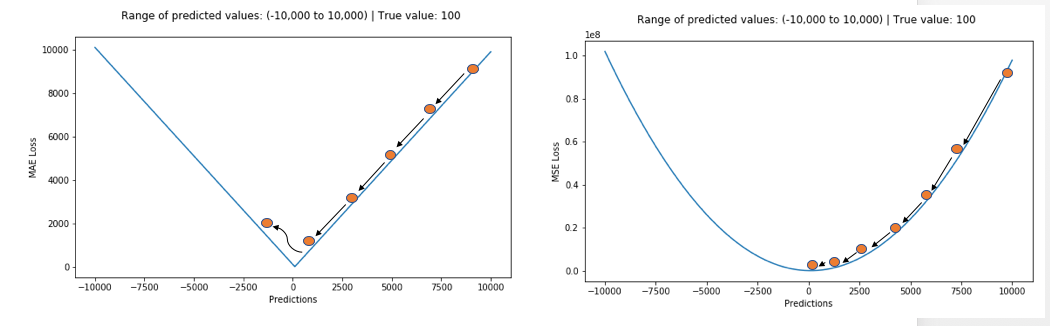

A Q-Function is a function that evaluates state-action pairs. The mean squared error function is widely used as it is simple continuous and differentiable. In general theres no law of the universe that says losses must be convex or differentiable.

Its a method of evaluating how well specific algorithm models the given data. A Q-Function learns the value of a state-action pair and estimates it to be the reward plus the estimate of the value of the future state. Unlike the Euclidean distance DTW can compare time series of variable size and is robust to shifts or dilatations across the time dimension.

It seems like youre trying to measure some sort of 1-accuracy eg the proportion of incorrectly labeled samples. Cross-entropy mean-squared-error logistic etc are functions that wrap around the true loss value to give a surrogate or approximate loss which is differentiable. Ideal Loss Function for Non-Differentiable Function.

In the context of general machine learning the primary reason 0-1 loss is seldom used is that 0-1 loss is not a convex loss function and also is not differentiable at 0. The algorithm searches for optimal weights by making small adjustments in the direction opposite the gradient. Machines learn by means of a loss function.

To compute DTW one typically solves a minimal-cost alignment problem between two. It turns out to be NP-hard to solve a problem exactly with regard to 0-1 loss. Gradually with the help of some optimization function loss function learns to reduce the error in prediction.

C can be ignored if set to 1 or as is commonly done in machine learning set to ½ to give the quadratic loss a nice differentiable form. It may be impossible to implement the function you give robust mean through loss minimization heres an outline of proof that its impossible to have a loss function that recovers the mean of smallest n-1 datapoints in a sample of size n and is differentiable on a dense subset of its domain. Same technique could show that you cant recover the mean of middle n-2 points and I think this could be extended to continuous loss functions.

Applications of Loss Functions Loss functions are used in optimization problems with the goal of minimizing the loss. Browse other questions tagged machine-learning loss-functions evolutionary-algorithms or ask your own question. The MSE is calculated by the sum of the squared distance between the target variable yi and its predicted value yip.

Active 1 month ago. However there are other optimization algorithms you can try. If predictions deviates too much from actual results loss function would cough up a very large number.

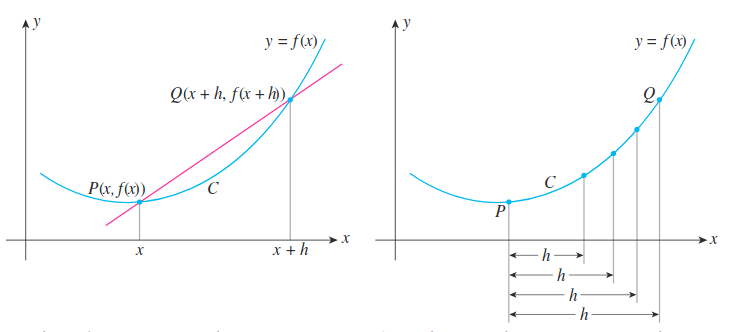

The most common loss function for regression problems is the mean squared error MSE. A loss function must be differentiable to perform gradient descent. This principle is also used when considering smooth activation functions for neural networks and allows us.

Non-convex losses might cause problems with the optimizer falling into local minima and these problems may or may not be surmountable with tricks like random restarts.

Derivatives Differentiability And Loss Functions

What Does It Mean When A Deep Learning Model Is Described As Being Fully Differentiable Quora

The Function S A Z Is A Soft Differentiable Approximation Of The Download Scientific Diagram

Non Smooth And Non Differentiable Customized Loss Function Tensorflow Stack Overflow

A Comprehensive Survey Of Loss Functions In Machine Learning Springerlink

10 Empirical Risk Minimization

5 Regression Loss Functions All Machine Learners Should Know By Prince Grover Heartbeat

Non Differentiable Hinge Loss Dashed And Differentiable Huber Loss Download Scientific Diagram

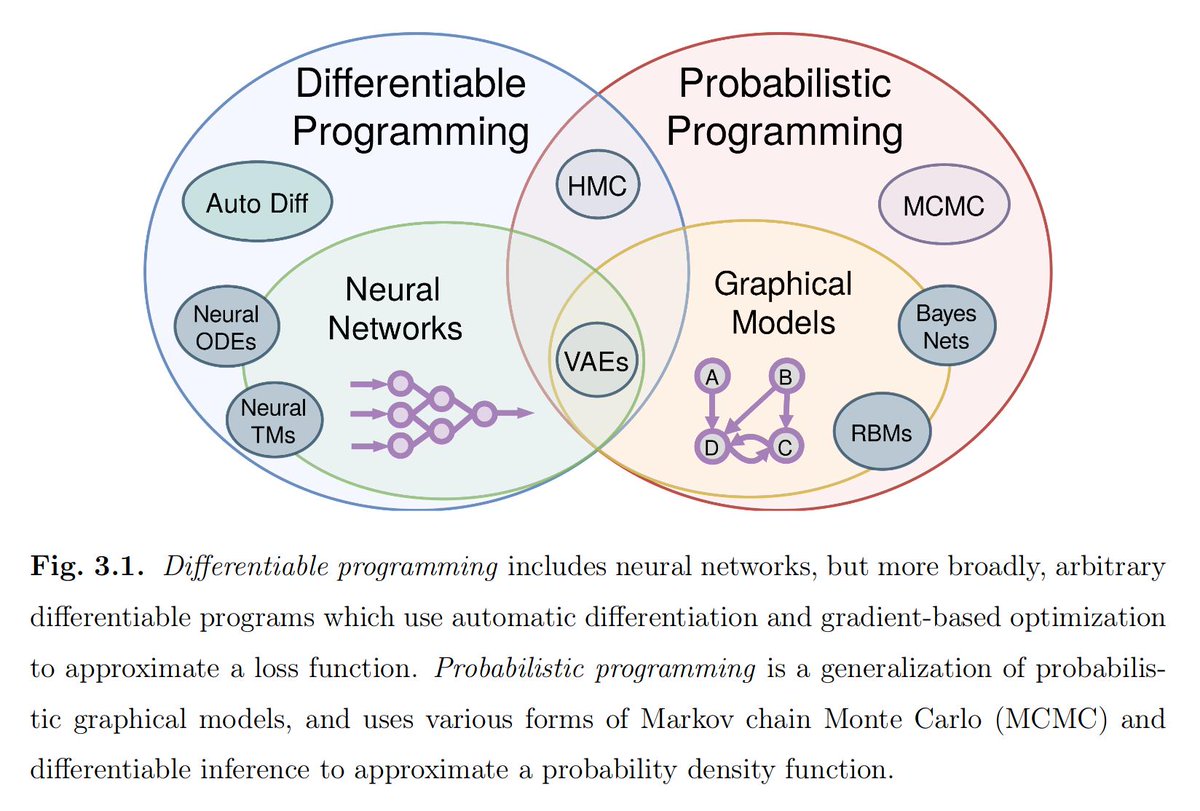

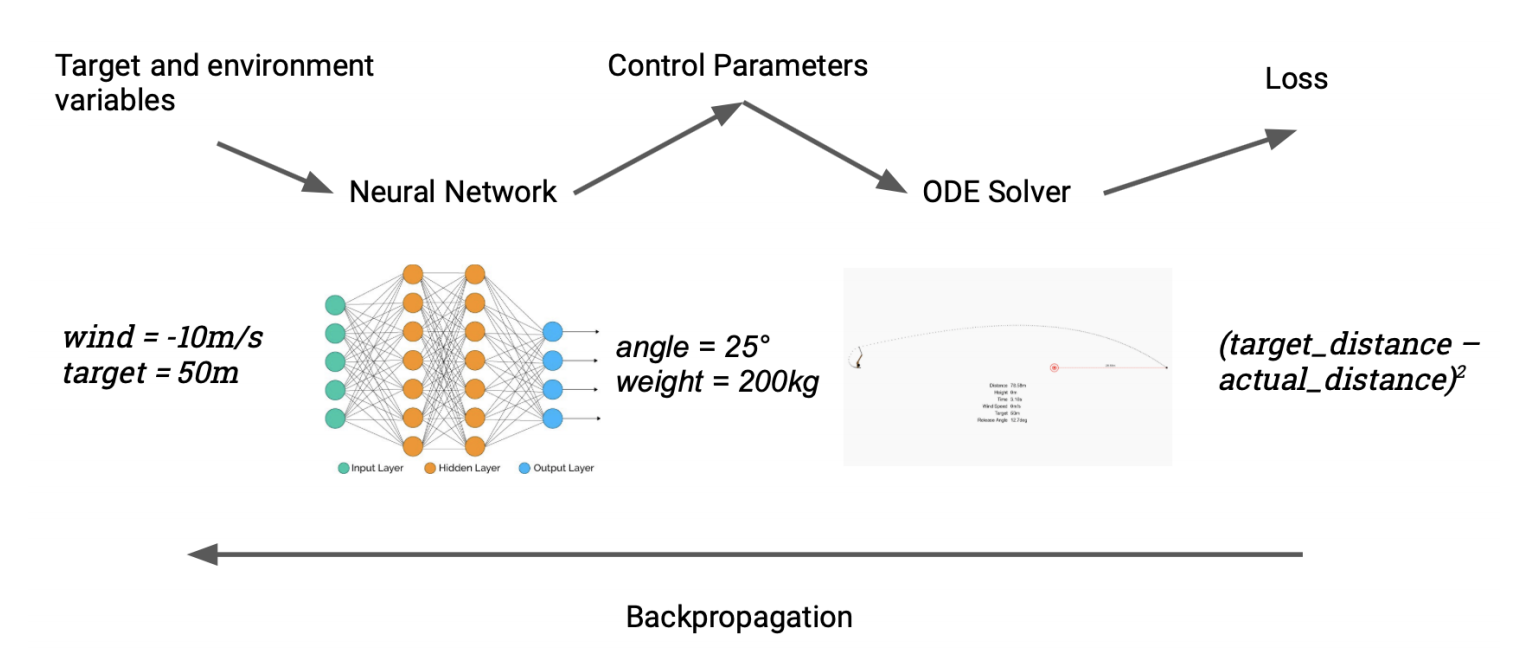

Reinforcement Learning Vs Differentiable Programming By Odsc Open Data Science Medium

10 Empirical Risk Minimization

Comp 61011 Machine Learning Support Vector Machines Reminder

A Beginner S Guide To Differentiable Programming Pathmind

Reinforcement Learning Vs Differentiable Programming By Odsc Open Data Science Medium

Asymmetric Cost Function In Neural Networks Cross Validated

![]()

Blog Machine Learning Loss Functions Evergreen Innovations Energy Storage Renewable Energy Innovation

Differentiable Loss Function Part 2 2017 Deep Learning Course Forums

Do Support Vector Machines Beat Deep Learning Towards Data Science

Julia Computing Mit Introduce Differentiable Programming System Bridging Ai And Science By Synced Syncedreview Medium

Post a Comment for "Machine Learning Loss Function Differentiable"